
2150

Holodeck-style environments are becoming possible

The concept of virtual reality had been explored as far back as the 1930s, when Stanley G. Weinbaum wrote his short story, Pygmalion's Spectacles. Published in Wonder Stories – an American science fiction magazine – this described a "mask" with holographic recording of experiences including smell, taste and touch.*

In the 1950s, Morton Heilig wrote of an "Experience Theatre" that could engage all the senses, drawing viewers into the onscreen action. In 1962 he built a prototype mechanical device called the Sensorama, which displayed five short films while engaging multiple senses (sight, sound, smell and touch). Around this time, engineer and inventor Douglas Engelbart began using computer screens as both input and output devices.

Later in the 20th century, the term "virtual reality" was popularised by Jaron Lanier. A major pioneer of the field, he founded VPL Research in 1985, which developed and built some of the seminal "goggles and gloves" systems of that decade.

A more advanced concept was depicted in TV shows like Star Trek: The Next Generation (1987-1994) and movies like The Matrix (1999). These introduced the idea of simulated realities that were convincing enough to be indistinguishable from the real world.

It was not until the late 2010s that virtual reality became a truly mainstream consumer technology.* By then, exponential advances in computing power had solved many issues hindering previous, cruder attempts at VR – such as cost, weight/bulkiness, pixel resolution and screen latency. It was possible to combine these headsets with circular or hexagonal treadmills, offering users the ability to walk in a seamless open world.* The Internet also enabled participants from around the globe to compete and engage with each other in massively multiplayer online role-playing games.

While clearly a huge improvement over earlier generations of hardware, these devices would pale into insignificance when compared to full immersion virtual reality (FIVR). As computers became ever smaller, aided by new materials such as graphene, they began to integrate with the human body in ways hitherto impossible. Their components had shrunk by orders of magnitude, following trends like Moore's Law. Machines that filled entire rooms in the 1970s had become smartphones by 2010 and the size of blood cells by the 2040s.* This occurred in parallel with accurate models of the brain, establishing a basic roadmap of neurological processes.* Full immersion virtual reality leveraged these advances to create microscopic devices able to record and mimic patterns of thought, directly inside the brain. Tens of billions of these "nanobots" could be programmed to function simultaneously, like a miniature Internet, with the end result that sensory information became reproducible through software. In other words, vision, hearing, smell, taste and touch could be temporarily substituted by a computer program, allowing users to experience virtual environments with sufficient detail to match the real world. First demonstrated in laboratory settings and military training environments, FIVR was commercialised in subsequent decades and became one of the 21st century's defining technologies.

Not everyone was amenable to having nanoscale machines inserted into their brains, however. In any case, full immersion VR provided only a superficial imitation of real life – it could not replicate every subatomic particle, for example, or the countless quantum events occurring at any given moment in time and space. Accounting for these phenomena would require a level of computing on a different scale entirely.

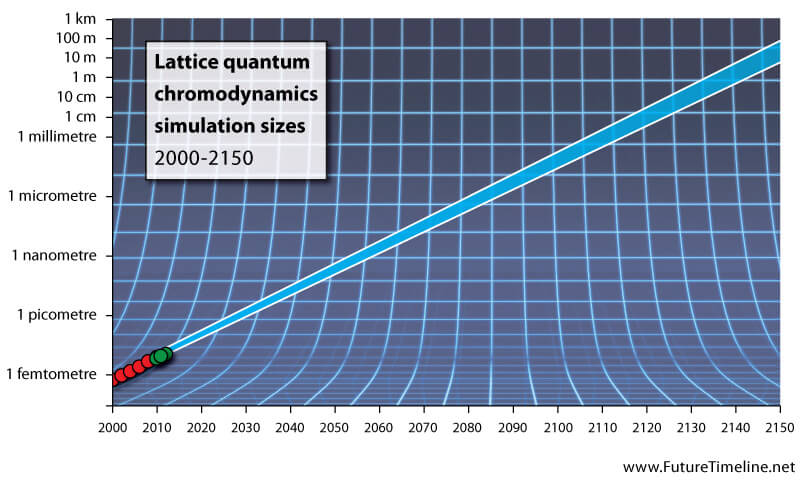

Lattice Quantum Chromodynamics (LQCD) was a promising field in the late 20th and early 21st centuries. It allowed researchers to simulate subatomic objects and processes in near-perfect detail, by dividing space-time into a fine grid (the "lattice") and applying the fundamental physical laws at each point. By the 2010s, for example, the proton's mass could be calculated from first principles to within about one percent. During the 2020s, exascale computing helped to further refine calculations of the nuclear forces and uncover exotic "new physics" beyond the Standard Model.

Smaller and smaller pixelations were being applied to greater and greater volumes of space-time, as supercomputers later reached the zettascale, yottascale and beyond. By the 2070s, it was possible to simulate a complete virus with absolute accuracy down to the smallest known quantum level.* Blood cells, bacteria and other living structures followed as this technique approached the macroscale. In the early 22nd century, with whole-brain scanning now sufficiently perfected, mind transfer became feasible for mainstream use. Another milestone was passed by 2140, with a cubic metre of space-time being accurately simulated.**

These four-dimensional lattice grids were, in effect, miniature universes – fully programmable and controllable. When combined with artificial intelligence, matter contained within their boundaries could be used to recreate virtually anything in real time and real resolution. Spatial extents continued to grow, reaching tens of metres. Although highly convincing VR had been around for over a century, achieving this level of detail at these scales had been impossible until now. By 2150, perfect simulations can be generated in room-sized environments without any requirement for on-person hardware.
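The cost of shrinking the "pixelation" while growing the simulated volume can be sketched with simple counting. The snippet below is a minimal illustration, not a description of any real simulation code: it assumes a uniform cubic lattice with a hypothetical spacing of 0.1 femtometres (the order of magnitude typical of early 21st-century lattice QCD calculations) and counts sites per time slice only. Real simulations store many field values per site and evolve many time slices, so these counts understate the true cost.

```python
import math

FEMTOMETRE = 1e-15  # metres

def lattice_sites(extent_m: float, spacing_m: float, time_slices: int = 1) -> float:
    """Count the sites in a 4D lattice: a cubic spatial box of side extent_m,
    sampled every spacing_m, repeated for time_slices time steps."""
    per_side = extent_m / spacing_m
    return per_side ** 3 * time_slices

# Hypothetical spacing of 0.1 fm; boxes of 10 fm, 1 micrometre and 1 metre.
SPACING = 0.1 * FEMTOMETRE
for extent in (10 * FEMTOMETRE, 1e-6, 1.0):
    sites = lattice_sites(extent, SPACING)
    print(f"box side {extent:.0e} m: about 10^{math.log10(sites):.0f} sites per time slice")
```

Because the site count scales with the cube of the box side divided by the spacing, going from a 10 fm box to a one-metre box at fixed spacing multiplies the number of sites by about 10^42.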

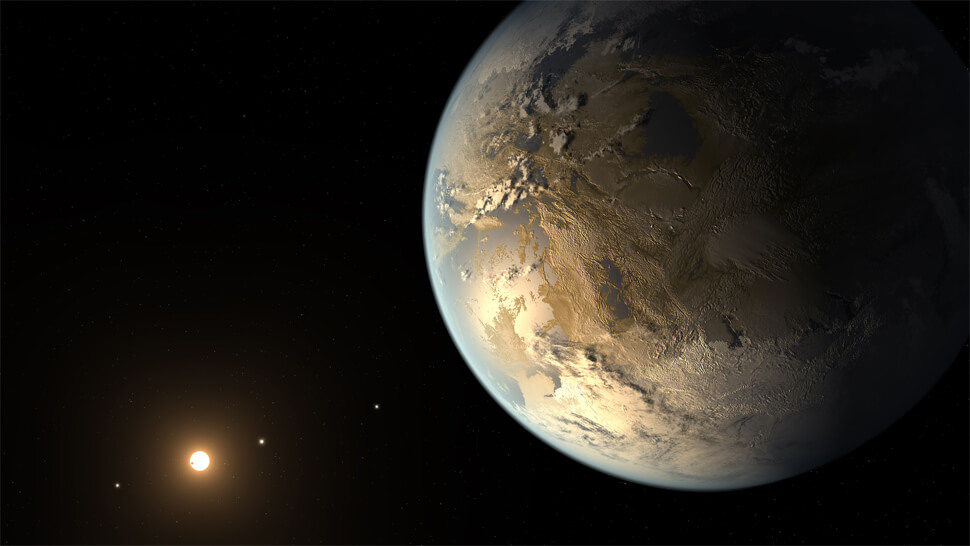

As virtual reality advances still further, entire worlds are constructed using the smallest quantum units for building blocks. This opens up some profound opportunities in the 23rd century. For example, artificial planet Earths can have their parameters altered slightly – gravity, mass, temperature and so on – then fast-forwarded billions of years to compare the outcomes. Intelligent species evolving on these virtual worlds may be entirely unaware that they are part of a giant simulation.

Hi-tech, automated cities

An observer from the previous century, walking through a newly developed city of 2150, would be struck by the sense of cleanliness and order. The air would smell fresh and pure, as if they were in pre-industrial countryside. Roads and pavements would be immaculate: made of special materials that cleaned themselves, absorbed garbage and could self-repair in the event of damage. Building surfaces, windows and roofs would be completely resistant to dirt, bacteria, weather, graffiti and vandalism. These same coatings would be applied to public transport, cars and other vehicles. Everything would appear brand new, shiny and in perfect condition at all times. Greenery would feature heavily in this city, alongside spectacular fountains, sculptures and other beautification.

Lamp posts, telegraph poles, signs, bollards and other visual "clutter" that once festooned the streets have disappeared. Lighting is achieved more discreetly, using a combination of self-illuminating walls and surfaces, antigravity and other features designed to hide these eyesores while maximising pedestrian space and aesthetics. Electricity is passed wirelessly from building to building. Room-temperature superconductors – having begun to go mainstream in previous decades – are now ubiquitous in every major city. These allow the rapid movement of vehicles without the need for tracks, wheels, overhead cables or other components. Cars and trains simply drift along silently, levitating on electromagnetic fields.

Signposts are obsolete – all information is beamed into a person's visual cortex. They merely have to "think" of a particular building, street or route to be given information about it.

This observer would also notice their increased personal space, and the relative quiet of areas that, in earlier times, would have bustled with cars, people and movement. In some places, robots tending to manual duties might outnumber humans. This is partly a result of the reduction in the world's population. However, it is also because citizens of today spend the majority of their time in virtual environments. These offer practically everything a person needs in terms of knowledge, communication and interaction – often at speeds much greater than real time. Limited only by a person's imagination, they can provide richer and more stimulating experiences than just about anything in the physical world.

On those rare occasions when a person ventures outside, they are likely to spend little time on foot. Almost all services and material needs can be obtained within the home, or practically on their doorstep – whether it be food, medical assistance, or even replacement body parts and physical upgrades. Social gatherings in the real world are infrequent, usually reserved for "special" occasions such as funerals, for novelty value, or the small number of situations where VR is impractical.

Crime is almost non-existent in these hi-tech cities. Surveillance is everywhere: recording every footstep of your journey in perfect detail and identifying who you are, from the moment you enter a public area. Even your internal biological state can be monitored – such as neural activity and pulse – giving clues as to your immediate intentions. Police can be summoned instantly, with robotic officers appearing to "grow" out of the ground through the use of blended claytronics and nanobots, embedded into the buildings and roads. This is so much faster and more efficient that, in most cities, sending officers to a crime scene by road or air in physical vehicles has become obsolete.

Although safe and clean, some of these hi-tech districts might appear rather sterile to an observer from the previous century. They would lack the grit, noise and character which defined cities in past times. One way that urban designers are overcoming this problem is through the use of dynamic surfaces. These create physical environments that are interactive. Certain building façades, for instance, can change their appearance to match the tastes of the observer. This can be achieved via augmented reality (which only the individual is aware of), claytronic surfaces and holographic projections (which everybody can see), or a combination of the two. A bland glass and steel building could suddenly morph into a classical style, with Corinthian columns and marble floors; or it could change to a red brick texture, depending on the mood or situation.

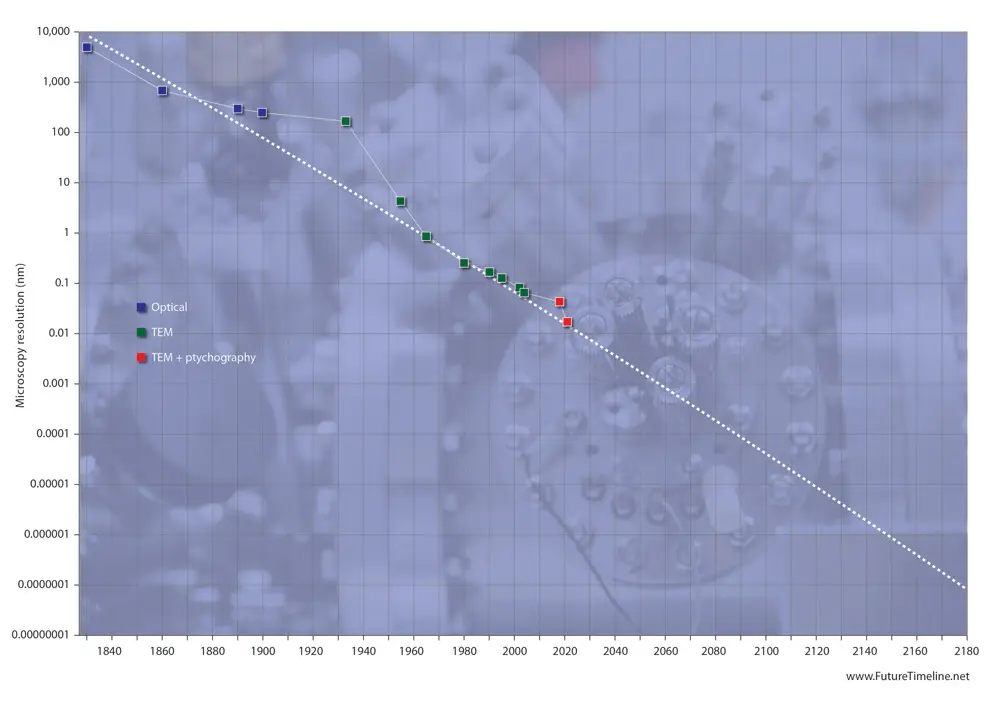

Protons and neutrons are visualised for the first time

From their inception in the 17th century, through to the present day, microscopes have revealed more and more of the unseen world, transforming our understanding of nature at scales too small for the naked eye. The pioneering work of Anton van Leeuwenhoek, using rudimentary lenses, first revealed the microscopic realm of bacteria and spermatozoa.

As the centuries progressed, traditional techniques reached their limit, resulting in the creation of electron microscopes in the 20th century. These new and revolutionary devices pushed the boundaries by allowing scientists to see individual atoms and intricate molecular structures.
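The limit reached by optical microscopes is set by diffraction: under the Abbe criterion, the smallest resolvable separation is about the wavelength divided by twice the numerical aperture (NA) of the objective. Electron microscopes sidestep this because electrons accelerated through high voltages have far shorter de Broglie wavelengths. The sketch below compares the two; the 550 nm wavelength, NA of 1.4 and 200 kV accelerating voltage are illustrative choices, not figures from the text.

```python
import math

H = 6.62607015e-34          # Planck constant, J s
M_E = 9.1093837015e-31      # electron mass, kg
E_CHARGE = 1.602176634e-19  # elementary charge, C
C = 299_792_458.0           # speed of light, m/s

def abbe_limit(wavelength_m, numerical_aperture):
    """Smallest resolvable separation, d = wavelength / (2 * NA)."""
    return wavelength_m / (2 * numerical_aperture)

def electron_wavelength(voltage_v):
    """Relativistic de Broglie wavelength of an electron accelerated through voltage_v."""
    ek = E_CHARGE * voltage_v  # kinetic energy, J
    momentum = math.sqrt(2 * M_E * ek * (1 + ek / (2 * M_E * C**2)))
    return H / momentum

optical = abbe_limit(550e-9, 1.4)    # green light, oil-immersion objective
electron = electron_wavelength(200e3)  # 200 kV electron beam
print(f"optical limit ~{optical * 1e9:.0f} nm; 200 kV electron wavelength ~{electron * 1e12:.2f} pm")
```

In practice, lens aberrations rather than the electron wavelength limit the resolution of electron microscopes, but even so they can resolve the roughly 0.1 to 0.3 nm spacing between atoms.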

However, subatomic particles remained elusive due to their incredibly tiny scale – orders of magnitude below even atoms – made yet more challenging by the peculiarities of quantum mechanics.

This quantum barrier proved to be a longstanding challenge. Attempts to directly observe particles such as protons and neutrons altered their very nature, due to a phenomenon known as the observer effect. To "observe" or "measure" a particle at the subatomic scale would typically require interacting with it in some way. This might involve using light (photons), for example, to detect it. But when a photon collides with a proton or neutron, it can impart energy to the particle, changing its momentum. In other words, the very act of trying to pin down a particle's location would alter its state.
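Rough numbers show why probing a proton disturbs it. As a rule of thumb (an assumption, not a precise criterion), resolving a feature about 1 femtometre across needs a probe of comparable wavelength, while the Heisenberg uncertainty principle bounds how sharply position and momentum can be known together. The constants are standard values; 0.84 fm is the approximate proton charge radius.

```python
HC_MEV_FM = 1239.84198     # h * c, in MeV * fm
HBARC_MEV_FM = 197.326980  # hbar * c, in MeV * fm
PROTON_MASS_MEV = 938.272  # proton rest energy, MeV

def photon_energy_mev(wavelength_fm):
    """Energy of a photon with the given wavelength: E = h c / lambda."""
    return HC_MEV_FM / wavelength_fm

def min_momentum_spread_mev(position_spread_fm):
    """Heisenberg bound: delta_p * c >= hbar * c / (2 * delta_x), in MeV."""
    return HBARC_MEV_FM / (2 * position_spread_fm)

probe = photon_energy_mev(1.0)        # photon able to resolve ~1 fm
kick = min_momentum_spread_mev(0.84)  # localising to ~ the proton radius
print(f"probe photon ~{probe:.0f} MeV vs proton rest energy {PROTON_MASS_MEV:.0f} MeV")
print(f"minimum momentum spread ~{kick:.0f} MeV/c")
```

A photon energetic enough to resolve the proton carries more energy than the proton's own rest mass, so any such "look" is a violent collision rather than a gentle observation.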

Furthermore, a quantum system could exist in a superposition of states; meaning that, for instance, a particle could be in different positions simultaneously. Protons and neutrons – composed of quarks bound together by gluons – could also become entangled, meaning that the state of one particle was dependent on the state of another, even over large distances. A measurement on one part of an entangled system could therefore influence the state of the other part, which could be viewed as another manifestation of the observer effect.

While even the greatest human minds of the 22nd century had struggled to overcome these challenges, artificial superintelligence (ASI) now dominated the world and brought forth a novel approach, marrying deep theoretical understanding with unprecedented computational prowess. These digital intellects began to design radically new and experimental setups, incorporating algorithms of almost unimaginable complexity and sophistication, able to account for – and even harness – the very quantum effects that had stymied direct observation for so long.

The ingenious methods of one world-renowned ASI allowed for indirect, yet precise interpretations of the states of protons and neutrons, without causing disruptive wavefunction collapses. In essence, this involved setting up quantum systems in ways that balanced measurement and interaction delicately, such that the inherent randomness of quantum mechanics was not a barrier, but a tool.

A milestone in microscopy has been reached by 2150, with protons and neutrons now visible for the first time. The culmination of recent research has been the introduction of Quantum Resonance Imaging (QRI). Rather than "seeing" in the traditional sense, a QRI device "senses" the quantum states of particles and translates them into visual representations.

These results are truly groundbreaking. For the first time, the behaviour of protons and neutrons can be witnessed in minute detail, unlocking whole new avenues in fundamental physics, nanotechnology, materials science and quantum computing. While many further orders of magnitude of improvement are required to reach the so-called Planck length, often regarded as the smallest meaningful length in physics, a clearer picture of the fabric of matter is now beginning to emerge.
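The gap between protons and the Planck length can be estimated from the latter's definition, the square root of ħG/c³. The sketch uses standard values for the constants and takes about 0.84 fm as the proton radius; the result is an order-of-magnitude comparison only.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J s
G = 6.67430e-11             # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0           # speed of light, m/s
PROTON_RADIUS_M = 0.84e-15  # approximate proton charge radius, m

planck_length = math.sqrt(HBAR * G / C**3)
gap = math.log10(PROTON_RADIUS_M / planck_length)  # orders of magnitude
print(f"Planck length ~{planck_length:.3e} m, about {gap:.0f} orders of magnitude below a proton")
```

The answer, roughly twenty orders of magnitude, gives a sense of how far microscopy would still have to go.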

The strong force, for example, which holds protons and neutrons together in a nucleus, is now better understood, with researchers using this knowledge to control and manipulate atomic structures with unprecedented precision. This offers the potential for novel materials and states of matter.

Meanwhile, as neutrons can be studied in greater detail, astronomers and physicists are refining their models of neutron star behaviour, internal structure, and associated phenomena. Neutron stars, as their name suggests, are composed almost entirely of these particles. Aspects of neutron decay are also revealing phenomena beyond the Standard Model's predictions.

Following the visualisation of protons and neutrons, the next major landmark for microscopy will be the revealing of quarks, an even smaller class of particles that form the building blocks of protons, neutrons, and other hadrons.

2151

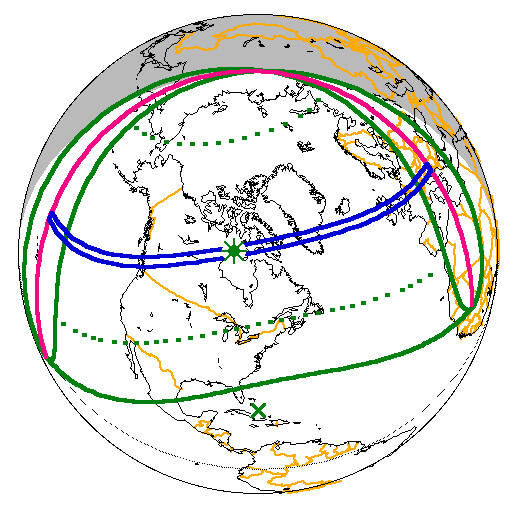

Total solar eclipse in London

A rare total eclipse takes place in Britain on 14th June this year, with parts of London in totality.* The last time this occurred was in 1715; the next will be in 2600 AD.

Credit: NASA

2160

The world's first bicentenarians

Some people who were born in the 1960s are still alive and well in today's world. At the turn of the 21st century, life expectancy had been increasing at a rate of 0.2 years per year. This incremental progress meant that by the time they were 80, these people could expect to live an additional decade on top of their original lifespan. However, the rate of increase was itself accelerating, due to major breakthroughs in medicine and healthcare, combined with better education and lifestyle choices. This created a "stepping stone", allowing people to buy time until the treatments available later in the century, which included the ability to halt the aging process altogether.*
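The "stepping stone" argument can be illustrated with a toy model. Each calendar year, a person's remaining life expectancy falls by one year as they age, while medical progress adds back some amount that itself grows over time. Everything here is hypothetical: the function name, starting values and growth rate are invented for illustration, and only the 0.2 years-per-year rate echoes the figure quoted above.

```python
def years_survived(remaining_years, gain_per_year, gain_growth, horizon=300):
    """Toy model of 'buying time' (hypothetical, for illustration only).

    Each calendar year the person ages one year, so remaining expectancy falls
    by 1, while medical progress adds gain_per_year, which then grows by
    gain_growth. Returns the year in which remaining expectancy reaches zero,
    or None if the person is still alive after `horizon` years."""
    for year in range(1, horizon + 1):
        remaining_years += gain_per_year - 1
        gain_per_year += gain_growth
        if remaining_years <= 0:
            return year
    return None

# Constant progress only delays the end; accelerating progress can outrun it.
print(years_survived(10, 0.2, 0.0))
print(years_survived(10, 0.2, 0.02))
print(years_survived(20, 0.2, 0.02))
```

Under these assumptions, survival depends not on a long natural lifespan but on staying alive until annual gains reach one year per year, a threshold often called "longevity escape velocity".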

2170

Underground lunar cities are widespread

In the second half of the 21st century, the emergence of low-cost space travel* made it relatively easy to access the Moon and its surface. This led to a surge of new explorers, entrepreneurs and cooperatives looking to make their mark on its undeveloped territories. Now, almost a hundred years after these early settlers, the lunar environment has become a hive of activity.

Lava tunnels – among the most sought-after locations – had formed in the ancient past by molten rock flowing underground, either from volcanic activity or the impact of comets and asteroids causing terrain to melt. These left behind enormous caves, with some of the largest found to be over 100 kilometres in length and several kilometres wide.

Telescopic observations, orbiting probes and landers in the 20th and 21st centuries revealed more and more information about these regions. Eventually it became possible to send fleets of automated drones and other vehicles below the surface, producing detailed 3D maps with imaging techniques such as LiDAR* and muon tomography. The latter had been used with great success on Earth: to uncover hidden chambers in the Egyptian pyramids, for example, to detect nuclear material in vehicles and cargo containers for the purposes of non-proliferation, and to monitor potential underground sites used for carbon sequestration. Its usage on the Moon allowed deep scanning of tunnels at high spatial resolution.*

By 2100, the Moon's surface and subsurface had been thoroughly mapped, with a large and growing number of commercial operations being established to secure prime real estate.

Lava tube on the Moon. Credit: NASA

The popularity of these underground sites was due in part to their shielding from radiation, micrometeorites and temperature extremes. Sealing of the "skylight" openings with an inflatable module, designed to form a hard outer shell, provided a further layer of protection – and the potential to create a pressurised, breathable atmosphere.

In addition to establishing a safe environment, a number of useful resources could be extracted from these depths. Titanium, for example, appeared in concentrations of 10% or more,* whereas the highest yields on Earth rarely exceeded 3%. The tunnels also contained rare minerals, which had formed as the lava slowly cooled and differentiated. In the polar regions, some tunnels led to frozen water deposits, providing easier access to them.*

As the decades passed, the expansion of underground colonies began to accelerate. The initial settlements, containing the bare essentials in terms of food, water, oxygen production and habitat modules, evolved into towns with their own culture and identity.

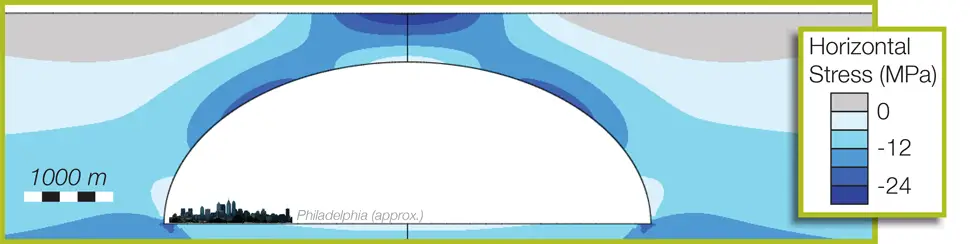

Structural engineers, having assessed the arch-like roofs overhead, found them to be stable even at widths of several kilometres.* On Earth, lava tubes were unable to form at such enormous sizes, but the Moon's lower gravity (0.16 g) and lack of weathering or erosion made this possible.

The Moon began to attract more and more residents, seeking a life away from Earth and the chance to form a new society. As a response to the environmental crisis and humanity's ever-growing footprint, a "deindustrialisation" movement had been gathering pace. This aimed to reduce the burden on Earth's ecosystems by "offworlding" many traditional production/extraction/manufacturing operations to the lunar environment.

By 2170* – just over two hundred years since humanity first set foot on the Moon – these lava tunnels are filled with entire cities, home to many millions of people and their robot/AI companions. Much of the infrastructure (including some very large supercomputers) has been imported from Earth, as part of the aforementioned deindustrialisation projects. Most of the original cave entrances, made from inflatable materials, have now been upgraded into fully fledged airlocks for handling the rapid arrival and departure of large spacecraft.*

In some of the largest and deepest caverns, the lunar conditions allow for a number of gigantic science experiments. For instance, neutrino detectors are being built at scales and efficiencies unmatched on Earth,* taking advantage of the greater isolation from background interference. These are revealing profound insights into astronomical phenomena and the nature of the Universe.

Ongoing expansion of the "datasphere" – defined as the world's aggregate of generated and stored data – continues to drive the growth of computing and related technologies in the 22nd century. With more and more space required to house data centres and supercomputers, the Moon has become a major focus of activity in terms of fulfilling this need. Formerly separate cave systems are now interconnected, forming hyper-fast networks across a significant portion of the Moon's surface and subsurface.

2175

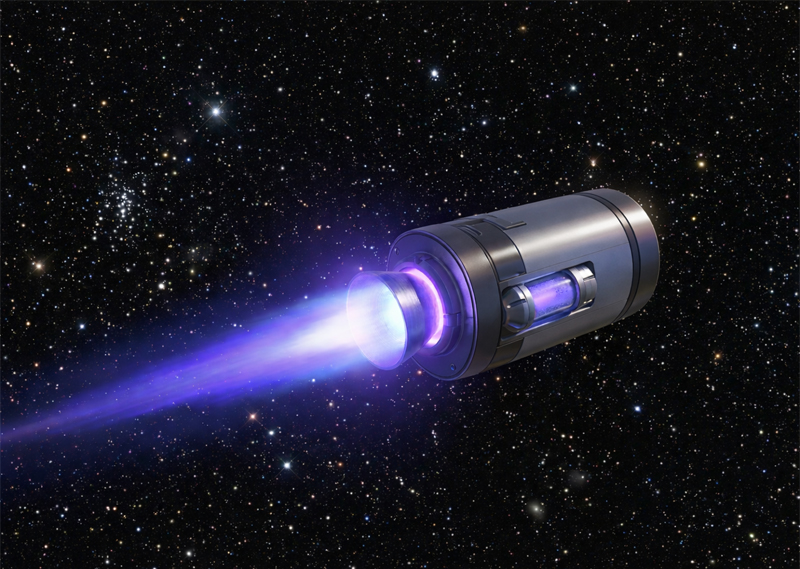

Interstellar probes are now a routine part of scientific exploration

By the late 22nd century, interstellar exploration is no longer a theoretical ambition, but a practical and steadily expanding field of research.* A new generation of deep space probes is accelerating to extraordinary speeds, now typically attaining between 10% and 30% of light speed depending on their engine configuration. Many of these arrive at their destinations within a few decades. The fastest and smallest, exceeding this typical range, can reach the nearest stars in less than a decade – an astonishing improvement on the earliest generations of spacecraft, when probes like Voyager 1 and 2 were destined to wander the galaxy for tens of thousands of years before encountering another star.
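The travel times follow from distance divided by speed. This sketch ignores acceleration and deceleration phases, reports times as measured from Earth, and assumes approximate values of 4.37 light years for the distance to Alpha Centauri and about 17 km/s for Voyager 1's speed relative to the Sun.

```python
LIGHT_YEAR_KM = 9.4607e12  # kilometres in one light year
SECONDS_PER_YEAR = 3.156e7
ALPHA_CENTAURI_LY = 4.37   # approximate distance, light years
VOYAGER_KM_S = 17.0        # approximate Voyager 1 speed relative to the Sun

def cruise_years(distance_ly, fraction_of_c):
    """Travel time in years at a constant fraction of light speed."""
    return distance_ly / fraction_of_c

for beta in (0.10, 0.30):
    print(f"{beta:.0%} of c: {cruise_years(ALPHA_CENTAURI_LY, beta):.1f} years")
print(f"under 10 years needs more than {ALPHA_CENTAURI_LY / 10:.0%} of c")

voyager_years = ALPHA_CENTAURI_LY * LIGHT_YEAR_KM / VOYAGER_KM_S / SECONDS_PER_YEAR
print(f"at Voyager 1's speed: ~{voyager_years:,.0f} years")
```

At 10 to 30 percent of light speed, Alpha Centauri is roughly 15 to 44 years away; a journey of under a decade implies cruising above about 44 percent of light speed.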

This progress is the result of two centuries of rapid advances in propulsion and energy technologies. Antimatter remains challenging to produce, but global output has multiplied by many orders of magnitude since the early 21st century. The world's largest antimatter facilities now generate kilograms per year, supporting a wide range of spaceflight and scientific applications.

Unlike the hybrid propulsion systems earlier in the century, which used antimatter only as a catalyst, modern interstellar probes now draw on antimatter as a primary energy source. Even a few grams of antimatter can deliver immense acceleration when paired with advanced containment, precise exhaust control and lightweight spacecraft structures.
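For scale, the energy released when antimatter annihilates with an equal mass of ordinary matter is E = 2mc², while a probe's relativistic kinetic energy is (γ − 1)mc². The sketch compares the two for three grams of antimatter and a hypothetical 1 kg payload at 20 percent of light speed, assuming perfect conversion of energy into motion, which no real drive achieves.

```python
C = 299_792_458.0         # speed of light, m/s
TNT_KILOTON_J = 4.184e12  # energy of one kiloton of TNT, J

def annihilation_energy_j(antimatter_kg):
    """Antimatter annihilating with an equal mass of matter: E = 2 m c^2."""
    return 2 * antimatter_kg * C**2

def kinetic_energy_j(mass_kg, beta):
    """Relativistic kinetic energy (gamma - 1) m c^2 at speed beta = v / c."""
    gamma = 1 / (1 - beta**2) ** 0.5
    return (gamma - 1) * mass_kg * C**2

e_3g = annihilation_energy_j(0.003)
print(f"3 g of antimatter: {e_3g:.2e} J (~{e_3g / TNT_KILOTON_J:.0f} kt of TNT)")
# Hypothetical 1 kg payload at 20% of light speed, perfect conversion assumed
print(f"1 kg at 0.2c: {kinetic_energy_j(1.0, 0.2):.2e} J")
```

The comparison shows why payload mass, containment and exhaust efficiency matter so much: every kilogram accelerated to a fifth of light speed demands energy on the scale of several grams of annihilating antimatter.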

Alongside antimatter-based propulsion, other designs continue to gain prominence. Fusion pulse drives, hybrid photonic sails and staged beam-pushed accelerators compete for different mission profiles. Most interstellar probes have masses comparable to those of microsatellite- and minisatellite-class spacecraft, carrying imaging and spectroscopy payloads similar in capability to early 21st-century planetary flagships such as New Horizons or Cassini, but vastly enhanced by advances in sensors, computing, and materials. A few of the larger flagship missions operate at scales comparable to smallsat-class spacecraft, using their greater mass budget to deploy clusters of micro-probes optimised for extreme speed or specialised measurements. Each functions as an autonomous laboratory, guided by powerful AI systems capable of self-repair, hazard avoidance and long-term decision-making without human oversight.

Travelling at a significant fraction of light speed makes even tiny grains of interstellar dust extremely dangerous, as their impacts can release enormous amounts of energy. To address this, modern probe designs combine active and passive protection. Forward-mounted plasma and particle systems create a stand-off region that ionises much of the incoming dust and gas, allowing electromagnetic fields to deflect a portion of it away from the craft.
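The danger posed by dust follows from relativistic kinetic energy: in the probe's frame, each grain arrives at the probe's own speed. The grain masses below (1 picogram and 1 microgram) are hypothetical examples, and one kilogram of TNT is taken as 4.184 MJ.

```python
C = 299_792_458.0  # speed of light, m/s
TNT_KG_J = 4.184e6  # energy of one kilogram of TNT, J

def impact_energy_j(grain_mass_kg, beta):
    """Kinetic energy (gamma - 1) m c^2 of a grain met at speed beta = v / c."""
    gamma = 1 / (1 - beta**2) ** 0.5
    return (gamma - 1) * grain_mass_kg * C**2

# Hypothetical grain masses: 1 picogram and 1 microgram
for mass in (1e-15, 1e-9):
    for beta in (0.1, 0.3):
        e = impact_energy_j(mass, beta)
        print(f"{mass:.0e} kg at {beta:.0%} of c: {e:.2e} J ({e / TNT_KG_J:.2g} kg of TNT)")
```

At 30 percent of light speed, a microgram grain, much lighter than a typical grain of sand, carries roughly the energy of a kilogram of TNT.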

Behind this, layered physical shielding provides a final line of defence. These structures serve as advanced Whipple-style systems,* using thin sacrificial bumpers to fragment and disperse surviving particles into a wider, lower-density spray before it reaches the main hull, which is engineered to absorb the remaining debris. Together, these layered approaches make relativistic travel far safer than early 21st-century studies suggested, though interstellar dust remains one of the most demanding engineering challenges of high-speed flight.

After traversing trillions of kilometres of interstellar space, the majority of probes conduct high-speed flybys of their target systems. Some of these missions release clusters of miniature orbiters, atmospheric probes and surface impactors during their passes, returning unprecedented images and scientific data from planets around Alpha Centauri* and Barnard's Star,* as well as detailed surveys of nearby stellar environments such as Wolf 359 and many other local stars. Slower missions use magnetic or beam-assisted braking methods to enter orbit, allowing longer-term study. Some probes also transmit long-baseline views of the Solar System itself, capturing Earth as a pale blue dot seen from light-years away and offering a new perspective on humanity's place in the cosmos.

Though still costly and complex, interstellar missions have become a routine part of international scientific planning. Each decade brings further exponential gains in antimatter storage, propulsion efficiency and shielding. The breakthroughs of the 21st century* – when laboratories first produced picograms of antimatter and interstellar travel remained firmly in the realm of speculation – now appear as the earliest sparks of a transformation that continues to unfold. Exploration beyond the Solar System is no longer a distant aspiration, but a frontier humanity steadily pushes outward, star by star.

2182

Close approach of the asteroid Bennu

In 1999, astronomers discovered 101955 Bennu, a near-Earth object (NEO) and member of the Apollo group of asteroids. Studies found it to be carbon-rich (carbonaceous), with a dark surface and low reflectivity, and measuring around 500 metres (1,640 feet) in diameter.

Bennu quickly became a focus of scientific attention due to the possibility of a collision with Earth. Orbiting the Sun every 1.2 years, it crossed Earth's path on a regular basis, making it a potentially hazardous object. Models of its trajectory indicated the highest cumulative rating for any object on the Palermo Technical Impact Hazard Scale – a logarithmic scale used by astronomers to judge the risk level of NEOs – with multiple close approaches predicted to occur in the 21st through 23rd centuries.
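The Palermo scale compares an object's impact probability with the background risk from all objects of similar or greater energy over the same period. For a single potential impact it is P = log10(p / (f_B × ΔT)), where p is the impact probability, ΔT the years until the potential impact, and f_B = 0.03 E^(-4/5) the annual background frequency for an impact energy E in megatons. The inputs below are illustrative, taken from figures elsewhere on this page and evaluated from 2023; they are not official catalogue values.

```python
import math

def palermo_scale(impact_probability, energy_mt, years_until_impact):
    """Palermo Technical Impact Hazard Scale value for one potential impact.
    Background annual frequency f_B = 0.03 * E^(-4/5), with E in megatons."""
    background_rate = 0.03 * energy_mt ** -0.8
    return math.log10(impact_probability / (background_rate * years_until_impact))

# Illustrative inputs: 1-in-2,700 odds, ~1,200 Mt, evaluated from 2023
print(round(palermo_scale(1 / 2700, 1200, 2182 - 2023), 2))
```

Negative values indicate a risk below the background level. A cumulative rating, like the one quoted for Bennu, combines every potential impact date by summing 10^P over them and taking the logarithm of the total.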

According to the simulations, Bennu would pass within about 0.0014 au (203,000 km; 126,000 mi) on 25th September 2135, or about half the distance between Earth and the Moon. During this approach, the object might enter a "gravitational keyhole" about 5 km (3.1 mi) wide, nudging it towards a far more dangerous encounter some 47 years later.
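A quick unit check of the close-approach distance, assuming the IAU astronomical unit of 149,597,870.7 km and a mean Earth-Moon distance of 384,400 km:

```python
AU_KM = 149_597_870.7    # IAU astronomical unit, km
EARTH_MOON_KM = 384_400  # mean Earth-Moon distance, km

def km_to_au(km):
    """Convert kilometres to astronomical units."""
    return km / AU_KM

closest_km = 203_000
print(f"{km_to_au(closest_km):.4f} au")
print(f"{closest_km / EARTH_MOON_KM:.2f} times the Earth-Moon distance")
```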

NASA's OSIRIS-REx mission, which collected a sample from Bennu's surface and returned it to Earth in September 2023, provided unprecedented data about its composition and structure. This helped scientists to refine models of the asteroid's future orbit, including the Yarkovsky effect – a small but significant force that arises as the asteroid absorbs sunlight and re-emits it unevenly as heat, gradually altering its trajectory.

Calculations showed an approximately 1 in 2,700 (or 0.037%) chance of hitting Earth on 24th September 2182.* As the monitoring of Bennu continued in subsequent decades, these odds remained low, but they highlighted the importance of ongoing asteroid tracking and planetary defence strategies.

Bennu orbits the Sun at an average of 28 kilometres per second (17.4 miles per second), but it would strike Earth at a much lower relative speed, no less than Earth's escape velocity of about 11 km/s. Even so, with a mass of 78 billion kilograms, a collision would release energy equivalent to around 1,200 megatons of TNT, causing widespread devastation. For comparison, the Tsar Bomba of 1961 – the largest nuclear device ever tested – had a blast yield of 50 megatons, meaning that Bennu would be 24 times more powerful, creating a 19 km (12 mi) fireball* and likely destroying everything within a 160 km (100 mi) radius, depending on the angle of impact.
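The energy figure can be checked with E = ½mv². The sketch shows that 28 km/s (Bennu's average speed around the Sun) would give several thousand megatons, whereas the quoted 1,200 Mt corresponds to an Earth-relative impact speed of roughly 11 km/s, close to Earth's escape velocity. One megaton of TNT is taken as 4.184 × 10^15 J.

```python
MT_TNT_J = 4.184e15     # energy of one megaton of TNT, J
BENNU_MASS_KG = 7.8e10  # mass quoted above

def impact_energy_mt(mass_kg, speed_km_s):
    """Kinetic energy 0.5 * m * v^2, expressed in megatons of TNT."""
    v = speed_km_s * 1000
    return 0.5 * mass_kg * v**2 / MT_TNT_J

def speed_for_energy_km_s(mass_kg, energy_mt):
    """Invert E = 0.5 * m * v^2 to find the speed giving a stated energy."""
    return (2 * energy_mt * MT_TNT_J / mass_kg) ** 0.5 / 1000

print(f"at 28 km/s: {impact_energy_mt(BENNU_MASS_KG, 28):,.0f} Mt")
print(f"1,200 Mt implies {speed_for_energy_km_s(BENNU_MASS_KG, 1200):.1f} km/s")
print(f"ratio to Tsar Bomba: {1200 / 50:.0f}x")
```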

By 2182, however, planetary defence strategies have advanced substantially. Over the nearly two centuries since Bennu's discovery, international collaborations on asteroid tracking, deflection methods, and emergency preparedness have all but eliminated the threat posed by this and other NEOs of similar size.

Credit: NASA's Scientific Visualization Studio

2190

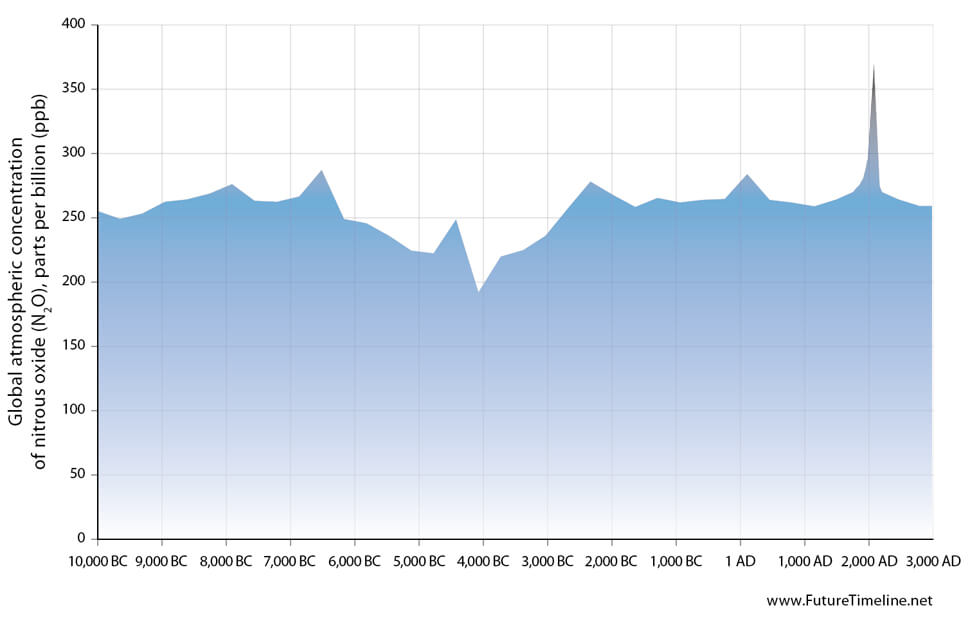

Nitrous oxide (N2O) has fallen to pre-industrial levels

Nitrous oxide (N2O) is a naturally occurring gas emitted by bacteria in the soils and oceans, forming part of the Earth's nitrogen cycle. It was first synthesised by English natural philosopher and chemist Joseph Priestley in 1772. From the Industrial Revolution onwards, human activities began to significantly increase the amount of N2O in the atmosphere. By the early 21st century, about 40% of total emissions were man-made.

By far the largest anthropogenic source (80%) was agriculture: the use of synthetic fertilisers to improve soil, as well as the breakdown of nitrogen in livestock manure and urine. Industrial sources included the production of chemicals such as nylon, internal combustion engines for transport, and oxidisers in rocketry. Known as "laughing gas" due to its euphoric effects, it was also used in surgery and dentistry as an anaesthetic and analgesic.

Nitrous oxide was found to be a powerful greenhouse gas – the third most important after carbon dioxide and methane. While not as abundant in the atmosphere as carbon dioxide (CO2), it had almost 300 times the heat-trapping ability per molecule and caused roughly 10 percent of global warming. After the banning of chlorofluorocarbons (CFCs) in the 1980s, it also became the most important substance in stratospheric ozone depletion.*

By the mid-21st century, the effects of global warming had become very serious.* While most efforts were focussed on mitigating CO2, attempts were made to address the imbalance of other greenhouse gases, including N2O. There was no "silver bullet" for this. Instead, it would take a combination of substantial improvements in agricultural efficiency, reduced emissions in transportation and industrial sectors, along with changes in dietary habits towards lower per capita meat consumption in the developed world. While many technologies and innovations were already available in earlier decades, these targets were unfortunately difficult to achieve – due to additional costs and the absence of political will for implementation. It was only during the catastrophic events in the second half of the century that sufficient efforts and financial resources were directed towards the problem.

With an atmospheric lifetime of about 114 years,* man-made N2O proved difficult to stabilise and remained in the atmosphere well into the 22nd century. By 2190, its concentration has fallen to around 270 parts per billion (ppb), the pre-industrial level.** As well as halting its contribution to global warming and ozone damage, other benefits include better overall air quality, reduced loss of biodiversity in eutrophied aquatic and terrestrial ecosystems, and economic gains.
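The slow recovery follows from treating the quoted 114-year lifetime as an e-folding time. This is a minimal sketch assuming simple exponential removal of the man-made excess once those emissions stop, with natural sources and sinks in balance; real atmospheric chemistry is more complex.

```python
import math

LIFETIME_YEARS = 114.0  # atmospheric lifetime quoted above

def remaining_excess(excess_ppb, years, lifetime=LIFETIME_YEARS):
    """Excess N2O left after `years`, assuming no further man-made emissions
    and first-order (exponential) removal."""
    return excess_ppb * math.exp(-years / lifetime)

half_life = LIFETIME_YEARS * math.log(2)
print(f"the excess halves roughly every {half_life:.0f} years")
for t in (50, 100, 200):
    print(f"after {t} years, {remaining_excess(1.0, t):.0%} of the excess remains")
```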


References

1 Pygmalion's Spectacles, Stanley Grauman Weinbaum:

http://www.gutenberg.org/files/22893/22893-h/22893-h.htm

Accessed 28th June 2014.

2 See 2015-2019.

3 Omni virtual reality interface is launched on Kickstarter, Future Timeline Blog:

https://www.futuretimeline.net/blog/2013/06/7-2.htm

Accessed 28th June 2014.

4 World's smallest single-chip system is <0.1 mm³, Future Timeline Blog:

https://www.futuretimeline.net/blog/2021/05/14-worlds-smallest-chip-volume-mm3.htm

Accessed 23rd September 2021.

5 The Singularity Is Near: When Humans Transcend Biology, Ray Kurzweil:

http://www.amazon.com/The-Singularity-Is-Near-Transcend/dp/0143037889

Accessed 28th June 2014.

6 See 2076.

7 See 2140.

8 Constraints on the Universe as a Numerical Simulation, Silas R. Beane et al., University of Washington:

http://arxiv.org/pdf/1210.1847v2.pdf

Accessed 28th June 2014.

9 Total Solar Eclipse of 2151 June 14, NASA:

http://eclipse.gsfc.nasa.gov/SEsearch/SEsearchmap.php?Ecl=21510614

Accessed 28th February 2010.

10 Aubrey de Grey – In Pursuit of Longevity, YouTube:

http://www.youtube.com/watch?v=HTMNfU7zftQ

Accessed 21st March 2010.

11 Launch costs to low Earth orbit, 1980-2100, Future Timeline:

https://www.futuretimeline.net/data-trends/6.htm

Accessed 7th June 2020.

12 Extraterrestrial Caves: The Future of Space Exploration, i-HLS:

https://i-hls.com/archives/90198

Accessed 7th June 2020.

13 "Muons, leptons produced in hadronic showers, can penetrate km-scale structures. The interiors of small bodies and surface structures could be mapped with high spatial resolution using a muontelescope (hodoscope) deployed in close proximity (in situ or from orbit)."

Deep Mapping of Small Solar System Bodies with Galactic Cosmic Ray Secondary Particle Showers, NASA:

https://www.nasa.gov/sites/default/files/files/Prettyman_NIAC_Symposium_2014.pdf

Accessed 7th June 2020.

14 New data about the Moon may help create lunar bases, NASA:

https://sservi.nasa.gov/articles/lunar-bases/

Accessed 7th June 2020.

15 Scientists Think They've Discovered Lava Tubes Leading to The Moon's Polar Ice, Science Alert:

https://www.sciencealert.com/scientists-discover-lava-tubes-leading-moon-polar-ice-water

Accessed 7th June 2020.

16 Huge lava tubes could house cities on Moon, Future Timeline:

https://www.futuretimeline.net/blog/2015/03/24.htm

Accessed 7th June 2020.

17 "Moontopia, a vision of what habitats on the Moon might look like in 150 years."

Moon habitat city concept shows how humans could live a comfortable life, Inverse:

https://www.inverse.com/article/60027-moon-habitat-city-concept-shows-how-humans-could-live-a-comfortable-life

Accessed 7th June 2020.

18 Moontopia, Hillarys:

https://www.hillarys.co.uk/static/moontopia/

Accessed 7th June 2020.

19 "From the observational point of view [...] the low neutrino backgrounds would make lunar viewing conditions significantly better than anything possible on the Earth in the energy range 1–1000 GeV."

A lunar neutrino detector, SAO/NASA Astrophysics Data System:

http://articles.adsabs.harvard.edu//full/1985lbsa.conf..335C/0000343.000.html

Accessed 7th June 2020.

20 Extrapolated from the long-term trend in antimatter production capacity. See 2130.

21 Whipple shield, Wikipedia:

https://en.wikipedia.org/wiki/Whipple_shield

Accessed 23rd December 2025.

22 Strong evidence of a gas giant in Alpha Centauri system, Future Timeline Blog:

https://www.futuretimeline.net/blog/2025/08/20-gas-giant-alpha-centauri.htm

Accessed 23rd December 2025.

23 Four small exoplanets confirmed around Sun's neighbour, Future Timeline Blog:

https://futuretimeline.net/blog/2025/03/18-barnards-star-future-timeline.htm

Accessed 23rd December 2025.

24 Breakthrough in antimatter production, Future Timeline Blog:

https://www.futuretimeline.net/blog/2025/11/24-breakthrough-in-antimatter-production.htm

Accessed 23rd December 2025.

25 101955 Bennu, Wikipedia:

https://en.wikipedia.org/wiki/101955_Bennu

Accessed 10th October 2024.

26 Asteroid Launcher, Neal.Fun:

https://neal.fun/asteroid-launcher/

Accessed 10th October 2024.

27 N2O: Not One of the Usual Suspects, NOAA:

http://www.esrl.noaa.gov/news/quarterly/fall2009/nitrous_oxide_top_ozone_depleting_emission.html

Accessed 27th May 2014.

28 See 2060-2100.

29 Greenhouse Gas – Global Warming Potential, Wikipedia:

https://en.wikipedia.org/wiki/Greenhouse_gas#Global_warming_potential

Accessed 27th May 2014.

30 Representative concentration pathways and mitigation scenarios for nitrous oxide, Eric A Davidson:

http://iopscience.iop.org/1748-9326/7/2/024005/article

Accessed 27th May 2014.

31 We have used a mid-range scenario for this graph – neither overly optimistic nor overly pessimistic.