Supercomputing News and Discussions

Re: Supercomputing News and Discussions

The #500 system in June 2016 used 4,930 6-core Xeon E5-2640 2.5GHz and the #500 system in June 2022 uses 2,880 20-core Xeon E5-2673v4 2.3GHz (both in the USA, neither with GPUs). Linpack fp64 performance increased from 286 teraflops to 1.65 petaflops. It would be possible to get to 16.5 petaflops by using newer (40 to 64-core) Xeons and GPUs. But do they want GPUs? Not everything can run on GPUs. Anyhow, getting from 1.65 to 16.5 petaflops at #500 doesn't look that challenging. The real challenge will be to get from 16.5 to 165 petaflops at #500. There are no known ways to do that within the budget. I imagine that with time, more and more software will run on GPUs, but they also tend to use more and more energy and require better and better cooling.
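For a rough sense of pace, the two #500 data points above can be turned into a yearly growth factor. This is only a sketch: the helper names are made up for illustration, and assuming the 2016 to 2022 pace simply continues is an assumption, not something the list guarantees.

```python
import math

def annual_growth_factor(start, end, years):
    """Constant yearly multiplier that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years)

def years_to_reach(multiple, yearly_factor):
    """Years needed to grow by `multiple` at a constant yearly multiplier."""
    return math.log(multiple) / math.log(yearly_factor)

# Figures from the post: #500 at 286 teraflops (June 2016)
# and 1.65 petaflops = 1650 teraflops (June 2022).
factor = annual_growth_factor(286.0, 1650.0, 6)

# Assumption: the same pace continues unchanged.
years_for_10x = years_to_reach(10.0, factor)

print(f"yearly growth of the #500 entry: {100 * (factor - 1):.0f}%")
print(f"years per 10x at that pace: {years_for_10x:.1f}")
```

At that pace each further 10x step at #500 takes most of a decade, which is the backdrop for the 16.5 to 165 petaflops concern.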

As an example:

After nearly 6 years I bought a graphics card for my system that is 2.5x more expensive, 2.5x faster than the previous one, and has 50% more memory. Progress is not good enough for radically improving performance or memory in reasonable time-frames. Unless you call waiting 8 years for 4x reasonable.

Global economy doubles roughly every 20 years. Livestock-as-food will globally stop being a major thing by the year 2050. Computers need a new paradigm to continue exponential improvement of information technology. Current paradigm will bring only around 4x above 2024 hardware and that is very limiting.

Re: Supercomputing News and Discussions

20 exaFLOP supercomputer proposed for 2025

18th August 2022

The U.S. Department of Energy (DOE) has published a request for information from computer hardware and software vendors to assist in the planning, design, and commission of next-generation supercomputing systems.

The DOE request calls for computing systems in the 2025–2030 timeframe that are five to 10 times faster than those currently available and/or able to perform more complex applications in "data science, artificial intelligence, edge deployments at facilities, and science ecosystem problems, in addition to traditional modelling and simulation applications."

U.S. and Slovakia-based company Tachyum has now responded with its proposal for a 20 exaFLOP system. This would be based on Prodigy, its flagship product and described as the world's first "universal" processor. According to Tachyum, the chip integrates 128 64-bit compute cores running at 5.7 GHz and combining the functionality of a CPU, GPU, and TPU into a single device with homogeneous architecture. This allows Prodigy to deliver performance at up to 4x that of the highest performing x86 processors (for cloud workloads) and 3x that of the highest performing GPU for HPC and 6x for AI applications.

Read more: https://www.futuretimeline.net/blog/202 ... s-2025.htm

supercomputing performance trend 1993-2023

Here's a good, clear trendline graph of the aggregate performance in FLOPS of the 500 supercomputers on the TOP500 list, made by Michael P. Frank (the tweet). It shows how the yearly growth of the combined performance of all 500 supercomputers dropped from +85% a year to +40% a year back in 2013. This means about 29x (all 500 combined) in 10 years, instead of about 470x in 10 years. That's a substantial change (~16x less) and should be taken into account (when making predictions, for example). The positive side is that it's still going up, just not as fast as it used to.
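The ten-year multiples above come from compounding the yearly growth rate. A quick check, where the function name is just illustrative and the rounded results may differ slightly from the figures quoted in the post:

```python
def ten_year_multiple(yearly_growth_pct, years=10):
    """Total growth multiple after compounding a yearly percentage gain."""
    return (1 + yearly_growth_pct / 100) ** years

before_2013 = ten_year_multiple(85)  # +85% a year
after_2013 = ten_year_multiple(40)   # +40% a year

print(f"+85%/year for 10 years: {before_2013:.0f}x")
print(f"+40%/year for 10 years: {after_2013:.0f}x")
print(f"gap between the two: {before_2013 / after_2013:.1f}x")
```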

Re: Supercomputing News and Discussions

UK to invest £900m in supercomputer in bid to build own ‘BritGPT’

Wed 15 Mar 2023 18.00 GMT

The UK government is to invest £900m in a cutting-edge supercomputer as part of an artificial intelligence strategy that includes ensuring the country can build its own “BritGPT”.

The Treasury outlined plans to spend around £900m on building an exascale computer, which would be several times more powerful than the UK’s biggest computers, and establishing a new AI research body.

An exascale computer can be used for training complex AI models, but also has other uses across science, industry and defence, including modelling weather forecasts and climate projections.

The Treasury said the £900m investment will “allow researchers to better understand climate change, power the discovery of new drugs and maximise our potential in AI.”

An exascale computer is one that can carry out more than one billion billion simple calculations a second, a metric known as an “exaflops”. Only one such machine is known to exist, Frontier, which is housed at America’s Oak Ridge National Laboratory and used for scientific research – although supercomputers have such important military applications that it may be the case that others already exist but are not acknowledged by their owners. Frontier, which cost about £500m to produce and came online in 2022, is more than twice as powerful as the next fastest machine.

https://www.theguardian.com/technology/ ... wn-britgpt

new TOP 500 supercomputers 06/2023 list

There is a new TOP500 list of the top 500 supercomputers in the world. It doesn't really include all supercomputers, but a significant number of them.

Aggregate High Performance Linpack (HPL) performance has risen by 18.2% since the first 2022 TOP500 list and by 6.1% since the second 2022 list (so not by much): from 3 exaflops in 11/2021, to 4.4 in 06/2022 (thanks to the introduction of Frontier at OLCF, Oak Ridge National Laboratory), to 4.9 in 11/2022 and to 5.2 in 06/2023.
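The percentages follow directly from the list totals quoted above. A small sketch, using only the rounded exaflops figures from this post (the variable and function names are illustrative):

```python
def pct_change(old, new):
    """Percentage change from `old` to `new`."""
    return (new / old - 1) * 100

# Aggregate HPL totals in exaflops, rounded as quoted in the post.
totals = {"11/2021": 3.0, "06/2022": 4.4, "11/2022": 4.9, "06/2023": 5.2}

since_first_2022 = pct_change(totals["06/2022"], totals["06/2023"])
since_second_2022 = pct_change(totals["11/2022"], totals["06/2023"])

print(f"06/2022 -> 06/2023: +{since_first_2022:.1f}%")
print(f"11/2022 -> 06/2023: +{since_second_2022:.1f}%")
```

For comparison, the same helper applied to the 11/2021 and 06/2022 totals shows how much of the earlier jump came with Frontier's arrival.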

The most efficient supercomputer on the list is the Henri system at the Flatiron Institute in New York City, with an energy efficiency of 65.40 Gflops/Watt.

Japan's Fugaku system once again achieved the top position on the HPCG ranking, holding on to its previous score of 16.0 HPCG-Pflop/s. The USA's Frontier claimed the No. 2 spot with 14.05 HPCG-Pflop/s, No. 3 was captured by Finland's LUMI with a score of 3.41 HPCG-Pflop/s, and No. 4 was taken by Italy's Leonardo supercomputer with 3.11 HPCG-Pflop/s.

https://top500.org/news/frontier-remain ... hpl-score/

Re: Supercomputing News and Discussions

2 ExaFLOPS Aurora Supercomputer Is Ready

It'll be coming online later this year

Argonne National Laboratory and Intel announced on Thursday that installation of 10,624 blades for the Aurora supercomputer has been completed and the system will come online later in 2023. The machine uses tens of thousands of Xeon Max 'Sapphire Rapids' processors with HBM2E memory as well as tens of thousands of Data Center GPU Max 'Ponte Vecchio' compute GPUs to achieve performance of over 2 FP64 ExaFLOPS.

The HPE-built Aurora supercomputer consists of 166 racks with 64 blades per rack, for a total of 10,624 blades. Each Aurora blade is based on two Xeon Max CPUs with 64 GB on-package HBM2E memory as well as six Intel Data Center Max 'Ponte Vecchio' compute GPUs. These CPUs and GPUs will be cooled with a custom liquid-cooling system.
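The "tens of thousands" of CPUs and GPUs can be checked from the rack and blade counts given above. A minimal tally, using only the per-blade figures from the article (the variable names are illustrative):

```python
# Counts as stated in the article.
racks = 166
blades_per_rack = 64
cpus_per_blade = 2   # Xeon Max
gpus_per_blade = 6   # Data Center GPU Max

blades = racks * blades_per_rack
cpus = blades * cpus_per_blade
gpus = blades * gpus_per_blade

print(f"blades: {blades:,}")
print(f"CPUs:   {cpus:,}")
print(f"GPUs:   {gpus:,}")
```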

And remember my friend, future events such as these will affect you in the future

Re: Supercomputing News and Discussions

Yuli Ban wrote: ↑Tue Jun 27, 2023 4:44 pm
2 ExaFLOPS Aurora Supercomputer Is Ready

Took us a long time to reach one exaflop. Now look at us leaping over to two exaflops like it's nothing.

To know is essentially the same as not knowing. The only thing that occurs is the rearrangement of atoms in your brain.

Re: Supercomputing News and Discussions

We will probably see 10+ exaFLOPS by 2026-27.

Re: Supercomputing News and Discussions

I hope so, but I have my doubts, especially about practical, useful, high-precision scientific performance. It's not impossible, but also not certain.

Re: Supercomputing News and Discussions

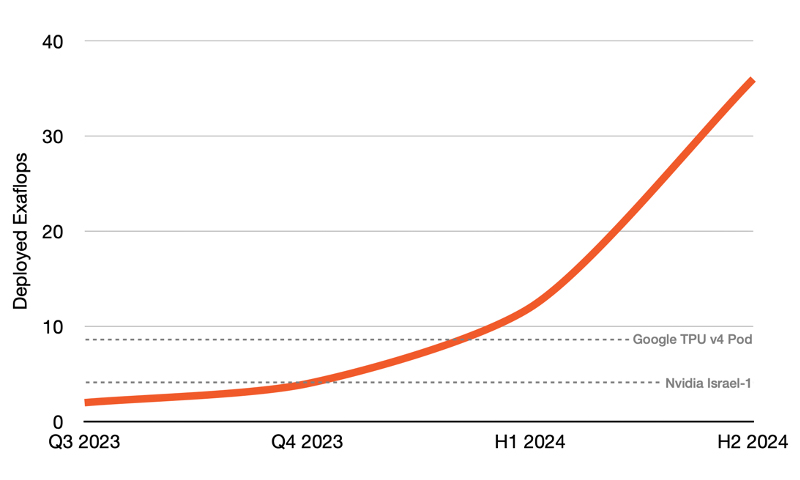

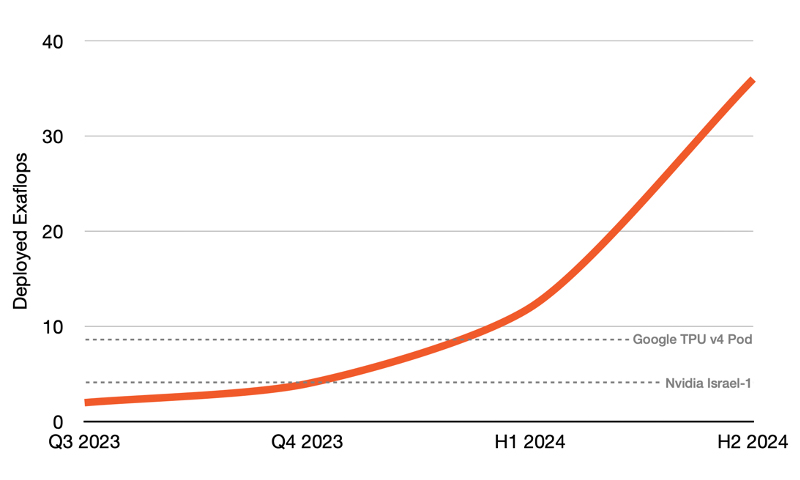

World's largest supercomputer for training AI revealed

25th July 2023

Cerebras Systems, in collaboration with G42, has revealed Condor Galaxy – a network of nine interconnected supercomputers, which together will provide up to 36 exaFLOPs for training AI.

https://www.futuretimeline.net/blog/202 ... aflops.htm

-

weatheriscool

- Posts: 16471

- Joined: Sun May 16, 2021 6:16 pm

Re: Supercomputing News and Discussions

This is what you get when you elect a party that hates science and wants to defund everything again and again. Your nation turns to shit!

Re: Supercomputing News and Discussions

Tachyum to build 50 exaFLOP supercomputer

October 3, 2023

Tachyum is to build a large scale supercomputer based on its 5nm Prodigy Universal Processor chip for a US customer.

The Tachyum supercomputer will have over 50 exaFLOP performance, 25 times faster than today’s systems and support AI models potentially 25,000 times larger with access to hundreds of petabytes of DRAM and exabytes of flash-based primary storage.

The Prodigy chip enables a significant increase in the memory, storage and compute architectures for datacentre, AI and HPC workloads in government, research and academia, business, manufacturing and other industries.

Earlier this year the Slovak/US company detailed a 20 exaflop supercomputer architecture using the Prodigy chip which is expected to sample next year.

Installation of the Prodigy-enabled supercomputer will begin in 2024 and reach full capacity in 2025. This will provide 8 Zettaflops of AI training for big language models and 16 Zettaflops of image and video processing. This would provide the ability to fit more than 100,000x PALM2 530B parameter models or 25,000x ChatGPT4 1.7T parameter models with base memory and 100,000x ChatGPT4 with 4x of base DRAM.

https://www.eenewseurope.com/en/tachyum ... rcomputer/

Re: Supercomputing News and Discussions

This is nuts, but after looking at projected performance charts, this seems to be in line with the predictions for 2025. Still, what a time to be alive.

Re: Supercomputing News and Discussions

Russia Plans to Use Banned Nvidia H100 GPUs to Build Top 10 Supercomputers

By Anton Shilov

published about 3 hours ago

Russia has an ambitious plan to build up to ten supercomputers by 2030, each potentially housing 10,000 to 15,000 Nvidia H100 GPUs. From a computing perspective, this would provide the nation with performance on a scale similar to that which was used to train ChatGPT. Formidable in general, a system featuring so many H100 GPUs could produce some 450 FP64 PFLOPS, which is about half of an ExaFLOP, a level of supercomputer performance that has only been achieved by the U.S., so far.
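A unit check on that estimate: one exaFLOP is 1,000 petaFLOPS, or 1,000,000 teraFLOPS, so "about half an exaFLOP" corresponds to hundreds of petaFLOPS. The sketch below assumes roughly 30 FP64 teraFLOPS per H100, a figure back-solved from the article's numbers rather than taken from a datasheet, and it ignores any scaling losses across the system.

```python
# Assumption (not stated in the article): ~30 FP64 TFLOPS per H100,
# chosen so that 15,000 GPUs land on the article's headline estimate.
ASSUMED_FP64_TFLOPS_PER_GPU = 30.0

TFLOPS_PER_PFLOPS = 1_000
PFLOPS_PER_EFLOPS = 1_000

def peak_pflops(gpu_count, tflops_per_gpu=ASSUMED_FP64_TFLOPS_PER_GPU):
    """Naive aggregate peak in petaFLOPS: GPU count times per-GPU peak."""
    return gpu_count * tflops_per_gpu / TFLOPS_PER_PFLOPS

low = peak_pflops(10_000)
high = peak_pflops(15_000)

print(f"10,000 GPUs: {low:.0f} PFLOPS ({low / PFLOPS_PER_EFLOPS:.2f} EFLOPS)")
print(f"15,000 GPUs: {high:.0f} PFLOPS ({high / PFLOPS_PER_EFLOPS:.2f} EFLOPS)")
```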

Spearheaded by the 'Trusted Infrastructure' team, the Russian project promises to push the boundaries of computational capabilities, with each machine potentially boasting between 10,000 and 15,000 Nvidia H100 GPUs. However, the desired AI and HPC compute GPUs would come from Nvidia, an American company.

The war Russia started in Ukraine has led the U.S. to restrict tech exports to Russia, creating a gaping hole in the procurement strategy for processors like Nvidia GPUs. Thus, the question that hangs in the air is: how could Russia bypass these restrictions since it would seem impossible to smuggle thousands of valuable AI and HPC GPUs?

The financial landscape also presents a set of challenges for the Russians. With today's hardware prices, the project's budget would be about $6 billion, making it a colossal investment. However, rapid advancements in technology have the potential to reduce costs in the coming years. Perhaps by 2030, these systems could cost $500 - $700 million.

Russia's most powerful supercomputer is Chervonenkis, which is owned by Yandex and is equipped with 1,592 nodes featuring Nvidia A100 GPUs. It ranks 27th in the world for computational power, with a performance of 21.53 PetaFLOPS. Three of the seven Russian supercomputers belong to Yandex (Lyapunov, Chervonenkis, and Galushkin), two to Sberbank (Christofari and Christofari Neo), and one each to MSU (Lomonosov) and MTS (GROM). All of them operate on Nvidia GPUs from previous generations.

https://www.tomshardware.com/news/russi ... rcomputers

-

firestar464

- Posts: 1955

- Joined: Wed Oct 12, 2022 7:45 am

-

weatheriscool

- Posts: 16471

- Joined: Sun May 16, 2021 6:16 pm

Re: Supercomputing News and Discussions

China Plans to Hit 300 Exaflops of Compute Power by 2025

The country is ramping up its AI arms race against the West.

By Josh Norem October 9, 2023

https://www.extremetech.com/computing/c ... er-by-2025

A new kind of conflict is brewing between China and the West, and it involves semiconductors instead of weapons and territory. The country's ministries have announced plans to expand its computing power significantly in the coming years, intending to hit 300 exaflops by 2025. This would require it almost to double its supercomputing power in the next two years, which is a goal the US would certainly like to prevent or at least slow down if possible.

The move by China is aimed at helping it maintain parity with the supercomputing power of the US. This long-running conflict has been given new life thanks to the recent push to build systems for training large language models (LLM) for AI applications. According to CNBC, six government ministries announced China's 300 exaflop goal, making it clear the country isn't backing down in its quest for computing supremacy despite broad US sanctions aimed at preventing it from acquiring advanced computing hardware. The country's ministries said its desire to obtain this level of computing power is fundamental to its goals in education and finance, which apparently isn't sarcasm.

Re: Supercomputing News and Discussions

UK’s first exascale supercomputer project goes to Edinburgh

October 9, 2023 - 11:24 am

The UK today said it had selected Edinburgh to host its first exascale next-gen supercomputer, which will be 50 times faster than its current highest capacity system.

The University of Edinburgh will house the country’s new exascale computing facility, which the government says will “safely harness its potential to improve lives across the country.” It will build on the technology and experience from the planned Bristol supercomputer — the AI Research Resource (AIRR), or Isambard-AI.

“If we want the UK to remain a global leader in scientific discovery and technological innovation, we need to power up the systems that make those breakthroughs possible,” said Michelle Donelan, Secretary of State for Science, Innovation, and Technology.

“This new UK government funded exascale computer in Edinburgh will provide British researchers with an ultra-fast, versatile resource to support pioneering work into AI safety, life-saving drugs, and clean low-carbon energy,” she continued.

Today’s announcement comes hot on the heels of last week’s declaration that the EU will build its first exascale computer in Germany.

https://thenextweb.com/news/uk-first-ex ... -edinburgh
