30th September 2024

AI hits 100% accuracy with CAPTCHA, beating humans

A new study has shown that AI bots can now solve Google's reCAPTCHAv2 with 100% accuracy, surpassing even humans in this task. This raises questions about the future of online security.

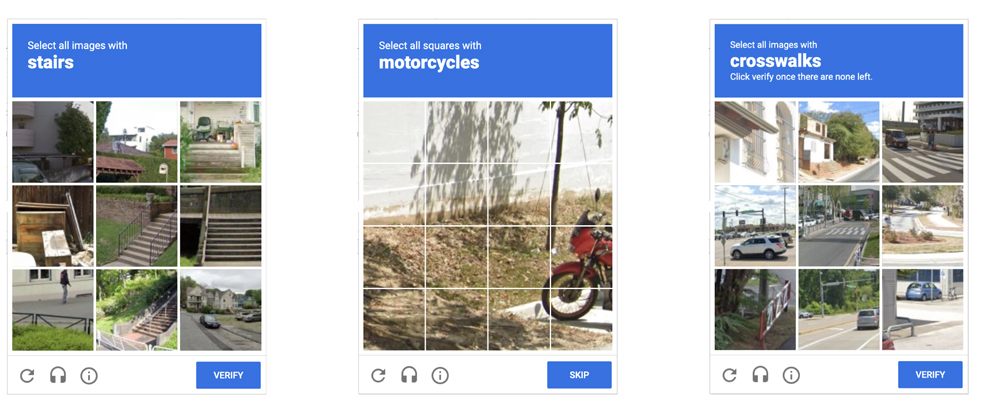

For over a decade, CAPTCHAs have been the standard way of determining whether you are a human or a bot when browsing online. These tests, ranging from identifying traffic lights in an image grid to simply ticking a box that says "I'm not a robot", are meant to ensure that only real people can access certain online services. But a new study from researchers at ETH Zurich has shown that machines are now better than humans at solving these challenges, raising concerns about the future of online security.

Google's reCAPTCHAv2, one of the most widely used CAPTCHA systems, is meant to distinguish humans from bots by presenting a series of image-recognition challenges. Users are asked to click on images that contain objects such as stairs, buses or crosswalks. The idea is that these tasks are simple for humans but much harder for bots. However, the new research finds that the latest generation of AI can solve these CAPTCHAs with 100% accuracy, compared with the 68–71% success rate reported by previous studies.

The breakthrough comes from advanced machine learning models, specifically the YOLOv8 (You Only Look Once) object detection system. This model, trained on large datasets of labelled images, can identify the objects in CAPTCHA challenges quickly and accurately. The researchers fine-tuned it to achieve unprecedented results, solving every CAPTCHA it encountered.

Even more surprisingly, the bots did not merely match human performance; in certain cases they outperformed humans. The study found little difference in the number of challenges that bots and humans needed to solve, but the bots handled the tasks more consistently.

The researchers discovered that reCAPTCHAv2 relies heavily on tracking users' browser data to decide whether they are human. Users with established browsing histories and cookies are more likely to be treated as trusted by the system, and often bypass CAPTCHAs altogether. Mouse movements and the use of virtual private networks (VPNs) also play crucial roles. When the bots in this study used VPNs and simulated mouse movements to mimic natural human behaviour, they became indistinguishable from real users.

This poses a significant challenge for online security. Google's reCAPTCHAv2 was designed to be a robust defence against bots, but these findings suggest that it is no longer effective in the age of advanced AI. The implications are far-reaching: bots could be used to bypass security on countless websites, perform automated tasks that are normally restricted to humans, and carry out malicious activities such as spamming or account takeovers.

The study also sheds light on the philosophical debate surrounding CAPTCHAs. It describes CAPTCHA as the "exact boundary between the most intelligent machine and the least intelligent human." That boundary, however, is rapidly blurring as AI continues to advance. CAPTCHA systems were once an effective way to block automated bots, but the future of the technology is now in question.

The ETH Zurich study also notes that Google's next-generation system, reCAPTCHAv3, has already moved away from asking users to solve puzzles. Instead, it monitors a user's past behaviour and assigns a risk score based on their activity. This approach has problems of its own, however: some legitimate users may lack enough convincing browsing data and could be locked out of vital online services. CAPTCHA systems must therefore strike a balance between security and accessibility.

This research is a wake-up call for anyone relying on CAPTCHA as a security measure. As bots become more capable, we may need entirely new systems for verifying user authenticity, systems that can evolve just as quickly as AI.
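The security-versus-accessibility trade-off of reCAPTCHAv3's risk score can be illustrated with a toy Python sketch. It is not Google's implementation. It assumes only that a site receives a reCAPTCHAv3-style score between 0.0 (likely a bot) and 1.0 (likely a human) and compares it against a threshold of its own choosing. The visitors, their scores and the helper names (`Visit`, `allow`, `outcome`) are hypothetical and invented for illustration; none of the numbers come from the ETH Zurich study.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    label: str
    is_human: bool  # ground truth, known only in this toy example
    score: float    # hypothetical v3-style score: 1.0 = likely human, 0.0 = likely bot


def allow(score: float, threshold: float) -> bool:
    """Let the request through only if the score meets the site's threshold."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0.0, 1.0]")
    return score >= threshold


def outcome(visits: list[Visit], threshold: float) -> tuple[int, int]:
    """Return (humans locked out, bots let in) for a given threshold."""
    locked_out = sum(1 for v in visits if v.is_human and not allow(v.score, threshold))
    let_in = sum(1 for v in visits if not v.is_human and allow(v.score, threshold))
    return locked_out, let_in


# Invented scores for illustration only; they are not data from the study.
VISITS = [
    Visit("human, long browsing history", True, 0.9),
    Visit("human, fresh browser and no cookies", True, 0.6),
    Visit("human, new device behind a VPN", True, 0.4),
    Visit("bot, simple script", False, 0.1),
    Visit("bot, simulated mouse movements", False, 0.7),
]

if __name__ == "__main__":
    for t in (0.3, 0.5, 0.8):
        locked_out, let_in = outcome(VISITS, t)
        print(f"threshold {t}: {locked_out} human(s) locked out, {let_in} bot(s) let in")
```

With these made-up numbers, a low threshold (0.3) locks out no humans but admits the bot that simulates mouse movements, while a high threshold (0.8) blocks both bots at the cost of locking out two legitimate users with thin browsing histories. No single threshold avoids both kinds of error here.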
