17th February 2024 OpenAI launches text-to-video generator The Internet is abuzz with talk about Sora, a new AI model that brings text-to-video generation to a whole new level.

Not even a year ago, a bizarre and nightmarish depiction of Will Smith eating spaghetti went viral. That video – made with Hugging Face's ModelScope – highlighted the nascent state of text-to-video generation. Other, more competent AI models later emerged. Overall, however, they have tended to lag behind the large language models (LLMs) like ChatGPT, being more of a novelty than a genuinely useful and serious tool. The size and complexity of algorithms required for AI-generated video, as opposed to merely text or images, has limited the practicality, and made the idea of an efficient and reliable text-to-video platform seem like more of a pipe dream than an imminent reality. That changed this week, however, as OpenAI revealed its latest creation: Sora. Founded in December 2015, the San Francisco-based company, headed by CEO Sam Altman, has already wowed the world with ChatGPT and the DALL·E series of image generators. With Sora, OpenAI has now moved into video.

Limitless creative potential

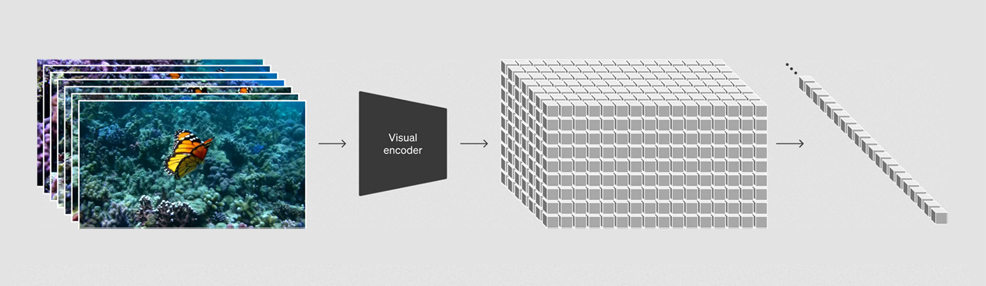

Sora is a text-to-video model, able to generate full HD-quality clips of up to 60 seconds, based on a user's descriptive prompts. Its name is based on the Japanese word for sky, which is meant to signify its "limitless creative potential". OpenAI trained the model using publicly-available videos, as well as copyrighted videos licensed for the purpose – each of variable durations, resolutions, and aspect ratios – but has yet to reveal the number or exact source(s) of these videos. Sora works by breaking down visual data into "patches", which are the video equivalent of the "tokens" used by text-based models like ChatGPT to predict the next word in a sequence. Patches are "a highly-scalable and effective representation for training generative models on diverse types of videos and images," according to OpenAI. If placed in a sequence, patches can maintain the correct aspect ratio and resolution.

Sora uses the "recaptioning" technique from DALL·E 3, which involves generating highly descriptive captions for the visual training data. After selecting and then compressing videos into a manageable format, key features called "spacetime patches" are extracted from the data, which serve as building blocks for producing dynamic effects. Not only is a new video generated from scratch, but the detail and flexibility provided by spacetime patches can allow sophisticated features like 3D consistency and simulated physics. For example, people and objects in a scene can move convincingly even when a camera's view is rotated, while foreground and background visuals can work together seamlessly. Put simply, with each of a sequence's new frames, the model learns to build a more accurate representation of the world – things like how a leaf falls, or the behaviour of a puppy on a leash, adhering to physical rules such as wind and gravity. It does this without even being programmed beforehand to know the underlying physics, but through the sheer volume of visual data, according to OpenAI.

Emergent capabilities

"Our results suggest that scaling video generation models is a promising path towards building general purpose simulators of the physical world," the company explains in a technical report. "We find that video models exhibit a number of interesting emergent capabilities when trained at scale. These capabilities enable Sora to simulate some aspects of people, animals and environments from the physical world. These properties emerge without any explicit inductive biases for 3D, objects, etc.—they are purely phenomena of scale." There are imperfections, however. OpenAI notes that Sora is unable to accurately model some complex effects, like glass shattering. It can also produce inconsistencies in an object's state and trigger the spontaneous appearance of new objects, especially in long-duration clips. Overall, though, its capabilities are clearly a major leap over previous efforts like Gen-2 by Runway, passing the uncanny valley in some of the examples published this week. OpenAI's new technique is also more efficient than earlier models, lowering the amount of computation required. The full model has yet to be publicly released, as testing is still underway. Limited access has been provided to a small "red team" that includes a number of experts in misinformation and bias, as well as creative professionals, to seek feedback on its usefulness. If and when it does become widely available, Sora looks set to be an amazing tool for content creators – transforming areas like film production, animation, marketing, education, training, and simulation. Future versions, expanded from the current 60 seconds to longer formats, could democratise video creation and enhance storytelling by drastically reducing the budgets needed for professional-looking work. But such a disruptive technology will inevitably raise concerns. While empowering many people, others could find themselves out of a job, including those who contribute footage to stock video websites. Why pay for a drone operator to obtain aerial shots of the California coastline, for example, when a simple AI prompt will do the same task in a matter of seconds, at little or no cost? In a particularly busy election year, the technology's potential for harm becomes even more concerning, as its capabilities could be misused to create convincing yet entirely fabricated content, swaying public opinion and undermining the electoral process. Author and journalist Steven Levy, writing in Wired, concludes that OpenAI has a "huge task" to prevent Sora becoming a "misinformation train wreck." Such implications highlight the growing urgency of moral, ethical, and safety issues surrounding AI. Indeed, the overwhelming sentiment being expressed on social media this weekend is the sheer pace of progress in the models. If their quality can improve by this much in less than a year, where might they be in another year? Two years? Five?

Comments »

If you enjoyed this article, please consider sharing it:

|

||||||