20th March 2023

A busy week in AI

Last week saw a plethora of new advances in AI, including the much-anticipated release of GPT-4.

Rarely, if ever, has more progress been witnessed in AI than what we are currently seeing. This is clearly an exceptional time for the field, with much hype and speculation around what the near future may bring. Following the recent launch of Google's PaLM-E, alongside numerous other developments, some futurists believe that so-called artificial general intelligence (AGI) – or, at least, proto-AGI – could be imminent. Such a milestone would be a clear and profound signal that machines are now rapidly catching up with humans in both cognitive and physical abilities. Whether or not these claims are overblown remains to be seen. However, even the most ardent of sceptics must surely concede that the recent flurry of technological breakthroughs has exceeded previous expectations. Now, another week has gone by – and what a week it has been. In this blog, we will look at just some of the announcements made over the last seven days by major companies and research teams in AI.

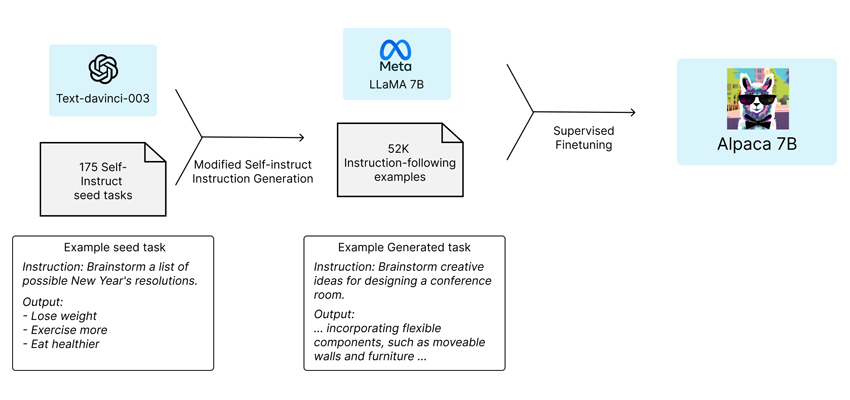

Alpaca 7B

On Monday, a team from Stanford University, California, launched a new language model called Alpaca 7B. In the team's own evaluation, it performs comparably to OpenAI's text-davinci-003, but at much lower cost and computing requirements. As explained in their press release, the academic community would like to study and experiment with large models like GPT-3 and ChatGPT – but this has proven difficult since "there is no easily accessible model that comes close in capabilities to closed-source models such as OpenAI's text-davinci-003." They have therefore created their own (similar) version. The researchers took 175 human-written seed tasks, which "primed" text-davinci-003 – one of OpenAI's GPT-3.5-series models – into generating a much larger dataset of 52,000 instruction-following examples. They then used this data to fine-tune a 7 billion-parameter variant of Meta's recently announced LLaMA model. The initial training run took three hours on eight NVIDIA A100 graphics processing units (GPUs), each with 80GB of memory, which costs less than $100 on most cloud compute providers. In a blind comparison test between Alpaca 7B and OpenAI's text-davinci-003, the researchers found that "these two models have very similar performance: Alpaca wins 90 versus 89 comparisons against text-davinci-003." In other words, the Stanford team built a model that is relatively tiny, yet performs similarly to text-davinci-003 in this comparison. Furthermore, LLaMA is available with up to 65 billion parameters, so the researchers could build a more powerful version of Alpaca, which might outperform the likes of GPT-4 in the near future. However, they emphasise that their program is only for academic research and any commercial use is prohibited, for now.
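
As a rough sanity check on the training cost quoted above, the arithmetic can be sketched in a few lines of Python. The GPU count, run time, $100 ceiling and win counts come from the article; the hourly price `ASSUMED_RATE_USD` is a purely hypothetical figure for illustration, not a quote from any cloud provider.

```python
# Figures quoted in the article.
NUM_GPUS = 8          # NVIDIA A100 80GB cards
TRAINING_HOURS = 3    # length of the initial fine-tuning run
BUDGET_USD = 100      # the run is said to cost less than this

# Total compute used, in GPU-hours.
gpu_hours = NUM_GPUS * TRAINING_HOURS

# Highest per-GPU hourly price at which the run still fits the budget.
max_hourly_rate = BUDGET_USD / gpu_hours


def training_cost(rate_per_gpu_hour: float) -> float:
    """Cost of the run in USD at a given price per GPU-hour."""
    return gpu_hours * rate_per_gpu_hour


# Hypothetical price per A100-hour, chosen only for illustration.
ASSUMED_RATE_USD = 3.00

# Share of the blind comparisons won by Alpaca (90 wins versus 89).
alpaca_win_share = 90 / (90 + 89)

print(f"GPU-hours used: {gpu_hours}")
print(f"Break-even price per GPU-hour: ${max_hourly_rate:.2f}")
print(f"Cost at the assumed rate: ${training_cost(ASSUMED_RATE_USD):.2f}")
print(f"Alpaca win share: {alpaca_win_share:.1%}")
```

In other words, the run used 24 GPU-hours, so the "less than $100" claim holds wherever an A100 can be rented for under roughly $4.17 per hour. The 90 versus 89 result is close to an even split.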

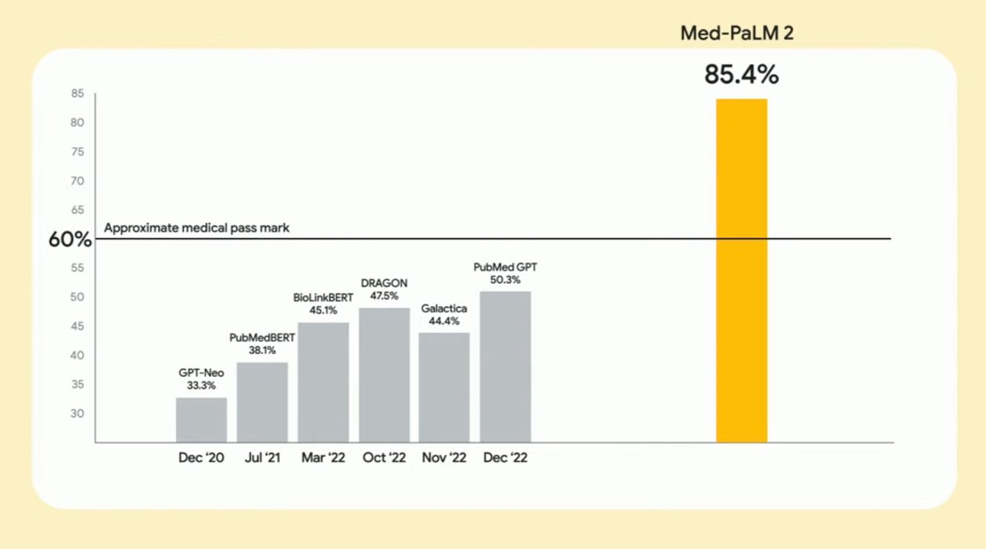

Med-PaLM 2

On Tuesday, Google released Med-PaLM 2, a large language model (LLM) for medical text. It can function like a virtual doctor, giving detailed answers to questions on a vast range of health-related subjects. Google announced the original PaLM (Pathways Language Model) in April 2022. With a massive 540 billion parameters (about three times the size of GPT-3), it could perform a wide variety of tasks, such as common-sense reasoning, mathematics, joke explanation, code generation, and translation. Google and DeepMind also developed a version called Med-PaLM, which they fine-tuned on medical data. Outperforming previous models, it became the first AI to obtain a passing score on U.S. medical licensing questions. In addition to answering both multiple choice and open-ended questions accurately, it could also explain its reasoning and evaluate its own responses. While Med-PaLM achieved a score of 67.2%, its successor is even more capable. Google reported that Med-PaLM 2 consistently performs at an "expert" level on medical exam questions, with a score of 85.4%, an improvement of more than 18 percentage points. In a presentation, the company provided examples of these, such as "What are the first warning signs of pneumonia?" and "Can incontinence be cured?" – which Med-PaLM 2 answered correctly and, in some cases, in more detail than human clinicians. However, given the sensitive nature of medical information, Google cautions that more work is needed before medical chatbots based on this model can enter mainstream use. "The potential here is tremendous," said Dr. Alan Karthikesalingam, senior researcher at Google Health. "But it's crucial that real-world applications are explored in a responsible and ethical manner."
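
The gap between the two exam scores quoted above can be read in two ways: as a difference in percentage points, or as a relative improvement over the earlier score. A minimal Python sketch of both calculations follows; the two scores come from the article, while the function names are our own.

```python
MED_PALM_SCORE = 67.2    # original Med-PaLM, in percent
MED_PALM_2_SCORE = 85.4  # Med-PaLM 2, in percent


def point_gain(old: float, new: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return new - old


def relative_gain(old: float, new: float) -> float:
    """Improvement expressed as a percentage of the old score."""
    return (new - old) / old * 100


points = point_gain(MED_PALM_SCORE, MED_PALM_2_SCORE)
relative = relative_gain(MED_PALM_SCORE, MED_PALM_2_SCORE)

print(f"Gain: {points:.1f} percentage points")
print(f"Relative improvement: {relative:.1f}%")
```

The score rose by 18.2 percentage points, which corresponds to a relative improvement of about 27% over the original Med-PaLM.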

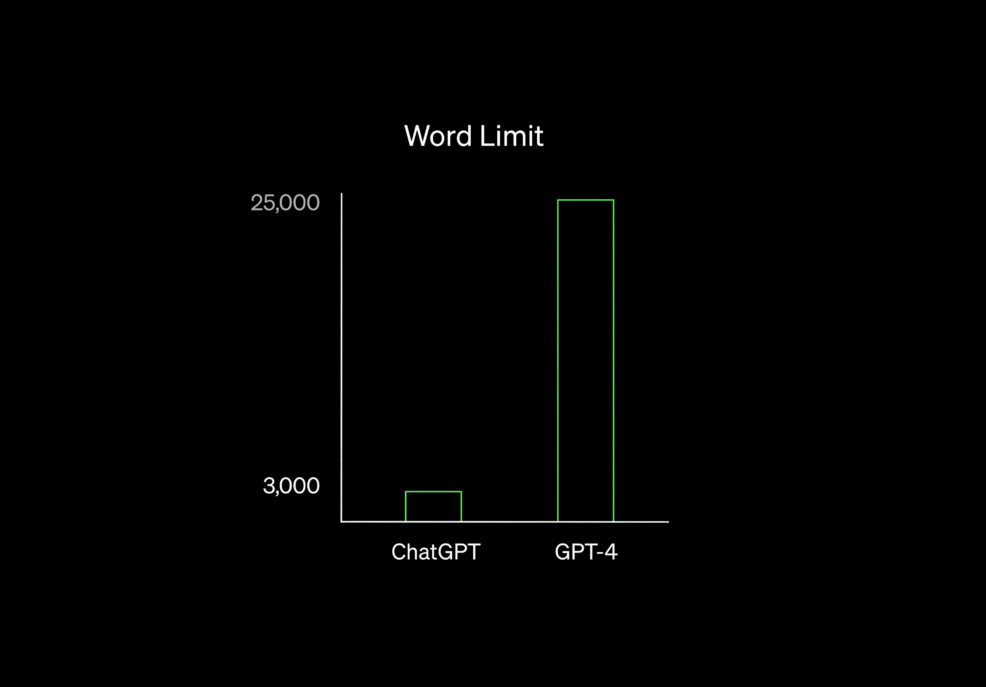

GPT-4

Also on Tuesday, OpenAI announced the release of Generative Pre-trained Transformer 4 (GPT-4). This follows ChatGPT, which launched in November 2022, and the earlier GPT-3 of 2020. In a major improvement over its predecessors, GPT-4 can accept both text and image inputs. OpenAI reports that the updated technology can pass a simulated law school bar exam, with a score in the top 10% of test takers. By contrast, GPT-3.5 fell into the bottom 10%. GPT-4 can read, analyse or generate up to 25,000 words of text (vs 3,000 for GPT-3.5 and only 1,500 for GPT-3), roughly equivalent to a 50-page document. It also writes code in all major programming languages. OpenAI states that GPT-4 is "more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5." Working with visual information means that it can, for example, describe humour in unusual images, summarise screenshotted text, or answer exam questions that contain diagrams. In one particularly impressive example, Greg Brockman, OpenAI's President and Co-Founder, photographed a mockup sketch with his phone and then passed it to GPT-4. In a matter of seconds, it had interpreted Brockman's handwritten text and produced all the HTML and JavaScript needed for a fully functioning website. GPT-4 outperforms ChatGPT by up to 16% on common machine learning benchmarks and is much better at multilingual tasks, which should make it more accessible to non-English speakers. Many safety and security concerns from the previous versions have also been addressed. "We incorporated more human feedback, including feedback submitted by ChatGPT users, to improve GPT-4's behaviour. We also worked with over 50 experts for early feedback in domains including AI safety and security," says OpenAI. "We spent six months making GPT-4 safer and more aligned. GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations."
However, OpenAI has been criticised for maintaining a closed approach with regard to its technical details. The parameter count, for example, remains undisclosed for now, as do the hardware specifications. Sasha Luccioni, a research scientist at AI community Hugging Face, argues that the model is a "dead end" for the scientific community due to its closed nature, which prevents others from building upon GPT-4's improvements. Hugging Face co-founder Thomas Wolf says that with GPT-4, "OpenAI is now a fully closed company with scientific communication akin to press releases for products". OpenAI has explained that "the competitive landscape and the safety implications of large-scale models" are factors that influenced this decision. GPT-4 is publicly available in a limited form via ChatGPT Plus, with a subscription costing $20/month. OpenAI is also providing it to a select group of applicants through its API waitlist. Duolingo, an online service for learning languages, has also integrated GPT-4 into its application, though it currently works only for English speakers who are studying French or Spanish.
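
To put the text limits quoted in this section into perspective, the short Python sketch below compares them and works out the page density implied by the 50-page figure. All word counts are the approximate ones given in the article; the variable names are our own.

```python
# Approximate text limits in words, as quoted in the article.
WORD_LIMITS = {
    "GPT-3": 1_500,
    "GPT-3.5": 3_000,
    "GPT-4": 25_000,
}
PAGES_FOR_GPT4 = 50  # the article equates 25,000 words to about 50 pages

# Page density implied by the 50-page comparison.
words_per_page = WORD_LIMITS["GPT-4"] / PAGES_FOR_GPT4

# How many times more text GPT-4 handles than each predecessor.
ratio_vs_gpt35 = WORD_LIMITS["GPT-4"] / WORD_LIMITS["GPT-3.5"]
ratio_vs_gpt3 = WORD_LIMITS["GPT-4"] / WORD_LIMITS["GPT-3"]

print(f"Implied words per page: {words_per_page:.0f}")
print(f"GPT-4 vs GPT-3.5: {ratio_vs_gpt35:.1f}x")
print(f"GPT-4 vs GPT-3: {ratio_vs_gpt3:.1f}x")
```

The 50-page comparison assumes about 500 words per page. On these figures, GPT-4 handles roughly eight times as much text as GPT-3.5 and nearly 17 times as much as GPT-3.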

Midjourney 5

On Wednesday, San Francisco-based research lab Midjourney, Inc. released a major update of its image generation program, which shares the same name as the company itself. Midjourney uses AI to produce a seemingly infinite variety of images from user-entered prompts, similar to OpenAI's DALL·E and Stable Diffusion. Although still relatively new, having first launched in March 2022, it has already gone through numerous improvements. Last week's announcement takes it up to version 5.0. The company also announced the launch of a new printed publication, Midjourney Magazine. Published monthly, it will feature community interviews alongside the best imagery and prompts. The first edition is free to anyone who uses promo code "subscriber" at the checkout, with subsequent issues priced at just $4 each. Like its predecessors, Midjourney V5 is only accessible through a Discord bot on the official Discord server, by direct messaging the bot, or by inviting the bot to a third-party server. The program isn't free and requires a paid subscription, available in three tiers – Basic ($8/mo), Standard ($24/mo), and Pro ($48/mo). Once a user has access, they can simply describe the kind of image they want, via prompts entered in the Discord chat box. The AI then generates an image using a model trained on a vast collection of photographs and illustrations, blending different objects and themes to create the most suitable output, often with surreal results. V5 includes many improvements over V4, according to Midjourney's founder David Holz. It now features a much wider stylistic range, a doubling of resolution, higher levels of detail that are more likely to be accurate (human hands, for example, are now rendered correctly), and less unwanted text. Prompting has been enhanced too, with new options for tweaking the final results – seamless tiling, aspect ratios, and "weighting" of image prompts versus text prompts.
But while its capabilities are truly impressive, Midjourney is proving to be controversial, with concerns over the use of artists' work. In January, three artists – Sarah Andersen, Kelly McKernan, and Karla Ortiz – filed a copyright lawsuit against the company, as well as Stability AI and DeviantArt, claiming they are infringing the rights of millions of artists by training AI tools on five billion images scraped from the web, without the consent of the original artists.

Microsoft 365 Copilot

The final entry on our list is Microsoft 365 Copilot. This new AI assistant will become a feature of Microsoft 365 applications and services, which include productivity programs such as Word, Excel, PowerPoint, and Outlook. It uses OpenAI's GPT-4 model, described above, combined with Microsoft Graph, to assist users in a range of tasks. Copilot has been compared to Microsoft's discontinued personal assistant known as "Clippy", though it will clearly be orders of magnitude more capable than that earlier software tool. Microsoft points to encouraging results from GitHub Copilot, the existing AI coding assistant from Microsoft-owned GitHub: among developers who use it, 88% say they are more productive, 74% say that they can focus on more satisfying work, and 77% say it helps them spend less time searching for information or examples. "Today marks the next major step in the evolution of how we interact with computing, which will fundamentally change the way we work and unlock a new wave of productivity growth," said Satya Nadella, Chairman and CEO, Microsoft. "With our new copilot for work, we're giving people more agency and making technology more accessible through the most universal interface – natural language."
