10th May 2016

Robotic diver can improve deep-sea exploration

Stanford University has revealed "OceanOne", an underwater robot with artificial intelligence and haptic feedback that can move around the seabed using thrusters.

Pictured here is OceanOne, a new underwater humanoid robot designed and built by scientists at Stanford University. Described as a "robotic mermaid", it is roughly five feet (1.5 m) in length, with two fully articulated arms, a head featuring twin camera eyes, and a tail section housing batteries, computers and eight multi-directional thrusters. The machine can function as a remote avatar for a human pilot, diving to depths that would be too dangerous for most people. The pilot steers it with a set of joysticks, while its human-like stereoscopic vision shows exactly what the robot is seeing. Equipped with haptic force feedback and an artificial brain, it is in essence a virtual diver. The pilot can take control at any moment, but usually won't need to lift a finger: sensors throughout the robot gauge currents and turbulence and automatically fire the thrusters to hold the robot in place. Even as the body moves, quick-firing motors adjust the arms to keep its hands steady as it works. Navigation relies on perception of the environment from both sensors and cameras, and these data feed algorithms that help OceanOne avoid collisions. If it senses that its thrusters won't slow it down quickly enough, it can brace for impact with its arms, an advantage of its humanoid build.

"OceanOne will be your avatar," says Oussama Khatib, Professor of Computer Science and Mechanical Engineering at Stanford. "The intent here is to have a human diving virtually, to put the human out of harm's way. Having a machine that has human characteristics that can project the human diver's embodiment at depth is going to be amazing."

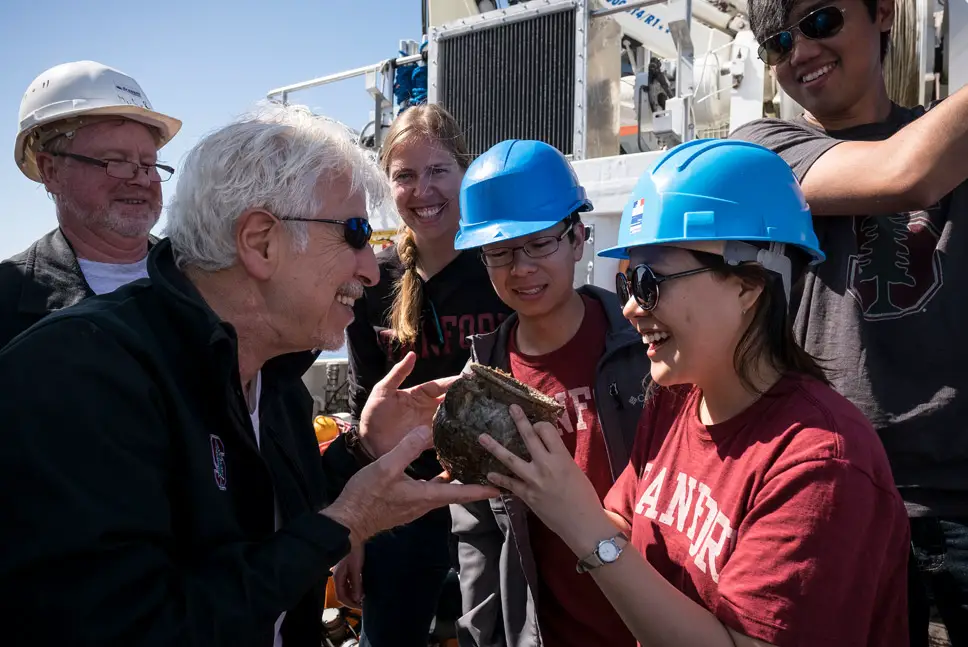

Indeed, Khatib recently used this robot to explore the wreck of La Lune, the flagship of King Louis XIV, which sank in 1664. The wreck lies 100 metres below the Mediterranean, 20 miles (32 km) off the southern coast of France, and in the centuries since, no human had touched the ruins or the many treasures and artifacts the ship once carried. With guidance from a team of deep-sea archaeologists who had studied the site, Khatib spotted a grapefruit-sized vase. He was able to hover precisely over it, reach out, feel its contours and weight through the haptic feedback, and stick a finger inside to get a better grip, all while sitting comfortably in a boat and controlling OceanOne with joysticks. The vase was placed gently in a recovery basket and brought back to the surface. When it arrived on the boat, Khatib became the first person to touch it in hundreds of years. It was in remarkably good condition, though it showed every day of its time underwater: the surface was covered in ocean detritus and smelled like raw oysters.


The expedition to La Lune was OceanOne's maiden voyage. Following its success, Khatib hopes the robot will one day take on highly skilled underwater tasks, opening up a whole new realm of ocean exploration. "We connect the human to the robot in a very intuitive and meaningful way," Khatib said. "The human can provide intuition, expertise and cognitive abilities to the robot. The two bring together an amazing synergy. The human and robot can do things in areas too dangerous for a human, while the human is still there." Earth is not the only world in our Solar System with oceans. Perhaps in the distant future, machines like OceanOne could be used to virtually explore moons such as Europa, Enceladus or Titan.
